Ask several people to draw a car and the shapes will probably be quite similar: a windscreen, four wheels, and doors.

While the external appearance of a car may remain largely unchanged, internal technologies have evolved significantly, transforming the characteristics and capabilities of modern vehicles. Where we once spoke about horsepower, speed, and fuel consumption, today we are more likely to define vehicles in technological terms.

This technological shift has introduced the concept of the software-defined vehicle (SDV). An SDV is a vehicle in which software, beyond the traditional mechanical systems, plays a central role in operation and functionality. An SDV’s performance is measured by how well it can sense, interpret, and react to the physical world in real time.

SDVs integrate hundreds, if not thousands, of embedded sensors of multiple types – accelerometers, gyroscopes, pressure sensors, environmental sensors, cameras, and radar. Combined, these create a comprehensive sensing mesh throughout the vehicle that collects data and feeds the vehicle’s processing systems. These building blocks enable safe, comfortable, efficient, and increasingly autonomous mobility.

To date, the transition from ‘traditional’ cars to SDVs has been built on the intersection of two parallel areas of innovation. The first is the growing intelligence inside the car through in-cabin monitoring; the second is the expanding awareness outside the car enabled by radar, lidar, and connected infrastructure.

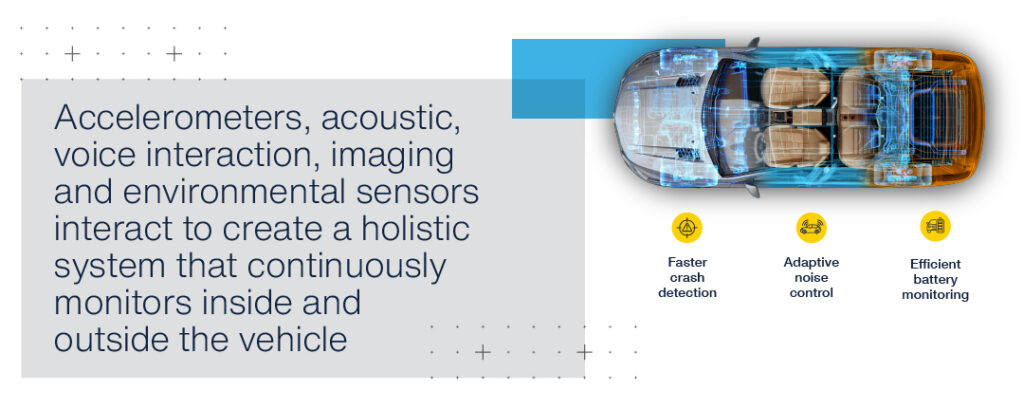

The ‘third’ stage will be more holistic – the vehicle itself will become a real-time sensing platform that is continuously aware of its environment, motion, and internal state. For example, sensors will detect road conditions during a rainy night, adjusting braking and steering to ensure safety, or monitor battery pack temperature patterns to identify early signs of degradation, preventing costly failures. Perception, not propulsion, is the metric by which cars will be measured in the future.

The foundation for this transformation is large-scale sensing, which will enable vehicles to make smarter decisions, such as detecting nearby pedestrians in low visibility or optimizing power usage based on real-time driving conditions.

The sensing-to-decision pipeline

As vehicles become more software-driven, competitive advantage in automotive is shifting. The leading edge of this industry belongs to those who are integrating the full sensing-to-decision pipeline.

This pipeline begins with sensors embedded throughout the vehicle, extends through edge processing and AI inference – in other words, local processing inside the vehicle – and culminates in software-defined actions across braking, steering, power management, infotainment, and safety systems. For instance, edge AI can process data from cameras and radar to detect a cyclist approaching from the side and initiate braking faster than a human could react. Control over this chain determines how quickly a vehicle reacts, how reliably it operates, and how it can be enhanced with additional intelligence.
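The cyclist example can be sketched as a tiny sensing-to-decision step. This is a minimal illustration rather than a production design: the `Detection` structure, the time-to-collision rule, and the 1.5-second braking threshold are all assumptions made for the example, not details from any specific vehicle platform.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One fused camera/radar detection (hypothetical structure)."""
    kind: str                 # e.g. "cyclist" or "vehicle"
    distance_m: float         # range to the object, in metres
    closing_speed_mps: float  # positive when the object is getting closer


def time_to_collision(d: Detection) -> float:
    """Seconds until contact at the current closing speed (inf if not closing)."""
    if d.closing_speed_mps <= 0:
        return float("inf")
    return d.distance_m / d.closing_speed_mps


def decide(detections: list[Detection], brake_ttc_s: float = 1.5) -> str:
    """Map perception output to a software-defined action.

    brake_ttc_s is an assumed threshold chosen only for illustration.
    """
    ttc = min((time_to_collision(d) for d in detections), default=float("inf"))
    return "BRAKE" if ttc < brake_ttc_s else "CONTINUE"


# A cyclist 6 m away and closing at 5 m/s leaves 1.2 s, so the sketch brakes.
action = decide([Detection("cyclist", 6.0, 5.0)])
```

Everything in this sketch runs locally, which reflects the point of the pipeline: the decision does not depend on a round trip to the cloud.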

The industry’s move toward zonal architectures amplifies this dynamic. Zonal architectures group sensors and controllers by physical location rather than by function. Within each zone, sensors are deeply integrated locally with electronic control units (ECUs). This creates shorter, more efficient sensor-to-action pathways that speed up critical functions, while data is still returned to central computing resources, where more complex algorithms process combined sensor data.
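A rough sketch of this split between a fast local path and a slower central path might look as follows. The `ZoneController` class, the sensor name, and the threshold are hypothetical, and a plain Python list stands in for the in-vehicle network link to central compute.

```python
from dataclasses import dataclass, field


@dataclass
class ZoneController:
    """Hypothetical controller for one physical zone of the vehicle."""
    zone: str
    # Stand-in for the network link to central compute (an assumption).
    uplink: list = field(default_factory=list)

    def on_reading(self, sensor_id: str, value: float, limit: float) -> bool:
        """Handle one reading; return True if a local action was triggered."""
        # Fast path: the time-critical check runs inside the zone.
        act_locally = value > limit
        # Slow path: every reading is still forwarded for central fusion.
        self.uplink.append((self.zone, sensor_id, value))
        return act_locally


central_inbox = []
front_left = ZoneController("front_left", central_inbox)
triggered = front_left.on_reading("wheel_slip", 0.35, limit=0.2)
```

The local check returns immediately, while the shared inbox accumulates readings from every zone for the more complex algorithms that run centrally.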

In this context, sensors are no longer commoditized components. They are strategic assets. Organizations that deliver accurate sensing data at scale will gain significant leverage over the entire vehicle experience.

Sensors that deliver high-fidelity data

SDVs promise continuous improvement in three dimensions – software updates, centralized compute, and zonal architecture.

Yet software alone cannot define a vehicle. To function meaningfully, SDVs require a constant stream of accurate, high-fidelity physical data about the vehicle and its surroundings.

This is where sensors become indispensable. Acting as the nervous system of the SDV, these sensors translate physical phenomena – such as pressure, acceleration, vibration, sound, temperature, and motion – into digital signals that software can interpret and act upon.
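On the software side, interpreting a digital signal usually starts with converting raw sensor counts into physical units. The sketch below assumes a hypothetical accelerometer that reports signed 16-bit values over a ±2 g range; real devices differ, and the scale factor should always come from the specific sensor's datasheet.

```python
G = 9.80665  # standard gravity, in m/s^2


def counts_to_accel(raw: int, full_scale_g: float = 2.0, bits: int = 16) -> float:
    """Convert a signed raw count to acceleration in m/s^2.

    full_scale_g and bits describe an assumed, illustrative sensor.
    """
    counts_per_g = (2 ** (bits - 1)) / full_scale_g
    return raw / counts_per_g * G


# Half of the positive range corresponds to 1 g for this assumed sensor.
accel = counts_to_accel(16384)
```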

These sensors include accelerometers that detect motion and vehicle dynamics; pressure sensors that monitor tires, airbags, and battery packs; acoustic sensors that enable road noise cancellation and voice interaction; and imaging and environmental sensors that capture visibility, proximity, and air quality. Their outputs feed into the core operating system and edge AI models that process data locally in real time, supporting decisions that cannot wait for cloud processing.

As sensing, edge processing, and AI models improve, the groundwork is being laid for even higher levels of vehicle autonomy.

Toward adaptive, context-aware vehicles

The way we view cars is changing as sensing becomes ubiquitous and software becomes central.

Vehicles are moving toward context-aware systems that understand motion, environment, and internal conditions simultaneously. For example, a context-aware vehicle can detect icy road conditions and adjust its traction control system, or monitor driver attentiveness and issue alerts if fatigue is detected. Going beyond autonomous driving alone, such vehicles will adjust their behavior and characteristics based on road quality, weather, driver attentiveness, and battery health.

Perhaps most importantly, this sensing-led architecture creates a platform for continuous learning. Each journey becomes a feedback loop, improving how the vehicle perceives and responds over time.

In making this a reality, perception is the essential foundation. Sensing is not an accessory to software-defined vehicles; it is the basis on which all other functions are built. For example, perception enables predictive maintenance by identifying unusual vibration patterns in the drivetrain, and it enhances safety by detecting nearby vehicles in blind spots. Maintenance will become predictive instead of reactive. Comfort will be personalized dynamically, not preset manually. Hazards will be anticipated based on contextual data, rather than addressed only in the moment.
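One simple way to flag an "unusual vibration pattern" is to compare the latest reading against a baseline of recent healthy readings. The sketch below uses a z-score test on RMS vibration levels. The function name, the sample values, and the threshold of three standard deviations are assumptions for illustration; production systems would more likely rely on spectral analysis or trained models.

```python
from statistics import mean, stdev


def unusual_vibration(baseline_rms: list[float], latest_rms: float,
                      z_limit: float = 3.0) -> bool:
    """Return True if the latest RMS level deviates strongly from the baseline.

    baseline_rms needs at least two samples; z_limit is an assumed threshold.
    """
    mu = mean(baseline_rms)
    sigma = stdev(baseline_rms)
    if sigma == 0:
        # A perfectly flat baseline: any change at all counts as unusual.
        return latest_rms != mu
    return abs(latest_rms - mu) / sigma > z_limit


# Hypothetical drivetrain vibration levels from recent healthy journeys.
healthy = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95]
flagged = unusual_vibration(healthy, 1.5)
```

Run on every journey, a check like this lets a developing fault be flagged before it turns into a failure.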

Realizing this vision demands not only advanced sensing capabilities but also comprehensive security. As vehicles become dense networks of connected sensors feeding AI-driven decision systems, cybersecurity shifts from a peripheral concern to a foundational requirement. Every sensor, every zone controller, and every data pathway must be hardened against security threats – because in a perception-driven vehicle, compromising the sensors means compromising the vehicle itself.

The vehicle is evolving into a living system, defined by its ability to sense the world and make intelligent decisions about what to do next.

For more information, please visit st.com