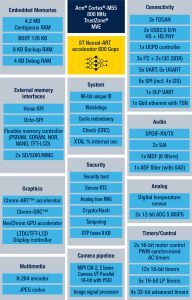

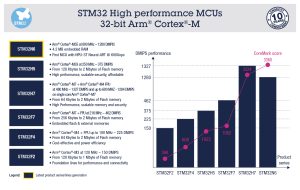

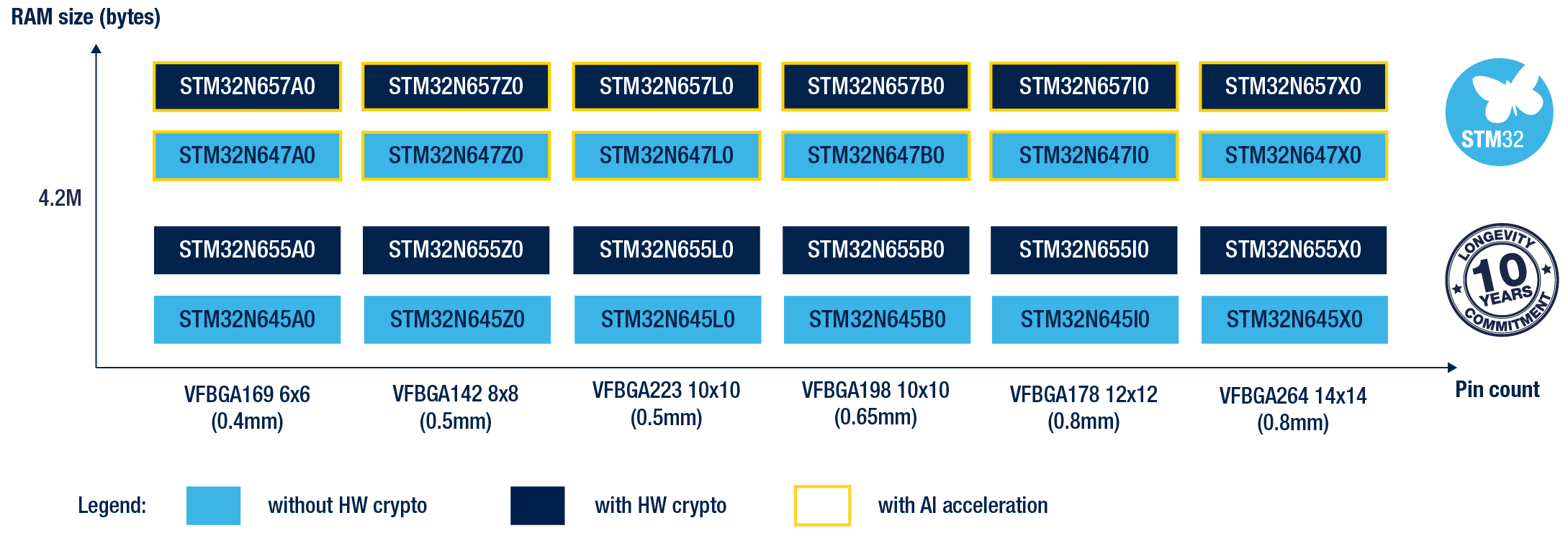

The STM32N6 is our newest and most powerful STM32 and the first to come with our Neural-ART Accelerator, a custom neural processing unit (NPU) capable of 600 GOPS, thus allowing machine learning applications that previously demanded an accelerated microprocessor to run on an MCU. It’s also our first Cortex-M55 MCU and one of the few in the industry to run at 800 MHz. Additionally, at 4.2 MB, the STM32N6 includes the largest embedded RAM on an STM32. It’s also our first device to include our NeoChrom GPU alongside an H.264 hardware encoder. Given all of these capabilities, ST will also offer a general-purpose version of the STM32N6 without an NPU to make it even more accessible.

What is the state of machine learning at the edge?

Democratizing AI on MCUs

The idea of running a machine learning application at the edge is far from new. ST helped popularize it when we launched STM32Cube.AI in 2019. The software solution converts a neural network into optimized C code for STM32 microcontrollers, thus ensuring great performance despite processing and memory constraints. It has enabled the creation of applications such as fault recognition on 3D printers and other anomaly detection and classification that ST has made more accessible thanks to tools like NanoEdge AI Studio. Nevertheless, many neural network algorithms are too demanding for a classic microcontroller, which, up until now, meant switching to a microprocessor with an NPU.

The inevitability of AI on MCUs

The challenge is that no matter how great a microprocessor gets, some integrators will always need a microcontroller. Reasons are varied, from the simpler bill of materials to the use of a real-time operating system or the lower power consumption. Regardless, AI at the edge will only become ubiquitous when it becomes more accessible on all embedded systems. Consequently, the industry is coming together to bolster AI on microcontrollers and more MCUs are adopting an NPU. However, these neural processing units tend to be very similar, if not identical. ST, though, chose a different path. Indeed, we took the unique approach of designing an NPU in-house.

STM32N6: Why a custom NPU?

The backstory of the Neural-ART Accelerator

Today, we can divulge that our teams have been working on the ST Neural-ART Accelerator since 2016. The STM32Cube.AI software solution that we released in 2019 was directly influenced by the research and development conducted on the ST Neural-ART Accelerator at the time. Then, as the industry adopted STM32Cube.AI and we saw how engineers were using our solutions to create innovative edge AI products, we tweaked our Neural-ART Accelerator to release something unique. No other general-purpose MCU maker has a hardware and software ecosystem for AI at the edge that’s as profoundly customized and optimized.

The uniqueness of the Neural-ART Accelerator

Concretely, the Neural-ART Accelerator in today’s STM32N6 has nearly 300 configurable multiply-accumulate units and two 64-bit AXI memory buses for a throughput of 600 GOPS. That’s 600 times more than what’s possible on our fastest STM32H7, which doesn’t feature an NPU. This breakthrough architecture not only allows for more operations per clock cycle and an optimized data flow that prevents bottlenecks but is also optimized for power consumption, delivering 3 TOPS/W.
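As a rough sanity check, the sketch below relates the MAC count to the throughput and efficiency figures quoted above. It rests on two assumptions that do not come from the text: each multiply-accumulate is counted as two operations, and "nearly 300" units is rounded to 300. The NPU clock and power draw are not stated here, so they are derived rather than quoted, and the function names are purely illustrative.

```python
# Back-of-the-envelope check of the Neural-ART figures quoted above.
# Assumptions (not from ST documentation): a MAC counts as 2 operations,
# and "nearly 300" MAC units is rounded to 300.

OPS_PER_MAC = 2  # one multiply + one accumulate (assumed convention)


def implied_clock_hz(throughput_ops: float, mac_units: int) -> float:
    """Clock needed for `mac_units` MACs to reach `throughput_ops` ops/s."""
    return throughput_ops / (mac_units * OPS_PER_MAC)


def implied_power_w(throughput_ops: float, efficiency_ops_per_w: float) -> float:
    """Power drawn at peak throughput for a given ops-per-watt efficiency."""
    return throughput_ops / efficiency_ops_per_w


if __name__ == "__main__":
    throughput = 600e9   # 600 GOPS, from the text
    efficiency = 3e12    # 3 TOPS/W, from the text
    mac_units = 300      # "nearly 300", rounded (assumption)

    clock_ghz = implied_clock_hz(throughput, mac_units) / 1e9
    power_mw = implied_power_w(throughput, efficiency) * 1e3
    print(f"Implied NPU clock: {clock_ghz:.2f} GHz")
    print(f"Implied peak power: {power_mw:.0f} mW")
```

Under these assumptions, the figures imply an NPU clock of about 1 GHz and a peak draw of about 200 mW. Both are idealized peak numbers, and real workloads will depend on the model and on memory traffic.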

ST also ensured that its Neural-ART Accelerator supported more AI operators at launch than what’s common in the industry. The new STM32N6 is already compatible with the largest number of AI operators from TensorFlow Lite, Keras, and ONNX, and we are committed to continuously increasing the number of supported operators. Additionally, the ability to use the ONNX format means data scientists can use the STM32N6 for the broadest range of AI applications. Put simply, we wanted to ship not only more optimized hardware than competing solutions but also a more accessible platform that would allow developers to keep their current workflow and reduce their time to market.

STM32N6: What makes it a flagship MCU?

A powerful Cortex-M55

The STM32N6 is, first and foremost, an STM32. In fact, it’s ST’s most powerful STM32, largely because it’s our first microcontroller to use a 16 nm FinFET process node. By adopting this lithographic technology, we ensured we could run the Cortex-M55 at 800 MHz. It also enabled us to embed the largest amount of RAM on an STM32 while including numerous IPs. The STM32N6 also comes with a Gigabit Ethernet module with support for time-sensitive networking, six SPI and two I3C interfaces, two 12-bit ADCs, four 32-bit advanced timers, and more.

A unique camera pipeline

Because we expect customers to use the STM32N6 with cameras in machine vision applications, thanks to its NPU and overall performance, we included our newest image signal processor (ISP), the same one found on the STM32MP2, which is compatible with the STM32 ISP IQTune software. The software ensures developers don’t need to hire expensive third-party service providers to tune the ISP to the CMOS sensor, lens, lighting conditions, and more. The STM32N6 also supports MIPI CSI-2, the most popular camera interface in mobile applications, without needing an external ISP compatible with this particular camera serial interface. Hence, the STM32N6 can more readily process images from multiple image sensors and future-proof a system.

A 2.5D GPU, lots of embedded RAM, and an H.264 encoder

Furthermore, the STM32N6 offers a unique memory configuration for GUI developers. Its 4.2 MB of RAM can hold a double frame buffer for a 1280 x 800 display. The STM32N6 also comes with Octo and Hexa SPI flash interfaces to fetch assets from external flash, with asset caching in external RAM, without risking a bottleneck.
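To see where the double-buffer claim comes from, the short sketch below computes the memory needed for two full-screen buffers at 1280 x 800. The pixel formats are common choices picked for illustration, not a statement of what ST used, and 4.2 MB is assumed to mean 4,200,000 bytes. The helper name `framebuffer_bytes` is hypothetical.

```python
# Rough frame-buffer budget for the display size quoted above.
# Assumptions (not from the article): 1 MB = 10**6 bytes, and the pixel
# formats below are common choices, not a statement of what ST used.

RAM_BYTES = 4_200_000  # 4.2 MB of embedded RAM, decimal megabytes assumed


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int,
                      buffers: int = 2) -> int:
    """Total bytes needed for `buffers` full-screen frame buffers."""
    return width * height * bits_per_pixel // 8 * buffers


if __name__ == "__main__":
    for name, bpp in (("RGB565", 16), ("RGB888", 24), ("ARGB8888", 32)):
        need = framebuffer_bytes(1280, 800, bpp)
        verdict = "fits" if need <= RAM_BYTES else "does not fit"
        print(f"{name}: {need:,} bytes for a double buffer, "
              f"{verdict} in {RAM_BYTES:,} bytes")
```

Under these assumptions, a 16-bit double buffer needs 4,096,000 bytes and fits, while 24-bit and 32-bit formats do not. A 16-bit buffer leaves little headroom, so a real design would also have to budget for the application's own data.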

The STM32N6 also embeds our NeoChrom GPU, an H.264 encoder, and a JPEG encoder and decoder. Combined with its large embedded RAM and fast flash interfaces, the new flagship MCU enables new applications like security panels with rich UIs and video streaming from camera sensors. It also means that developers can now envision running a neural network in conjunction with a GUI without having to use multiple MCUs since the NPU, the GPU, and multimedia encoders and decoders can offload the processor, thus enabling a lot more capabilities on one single device.

A comprehensive ecosystem of tools and partners

The fact that we customized the Neural-ART Accelerator and that we are so close to the metal of the STM32N6 means that ST can provide a comprehensive ecosystem of software tools that greatly facilitate and optimize the creation of new AI-enabled applications with the STM32N6. It starts with ST Edge AI Suite, a repository of free software tools, use cases, and documentation that help developers create AI for the Intelligent Edge, regardless of their experience level. ST Edge AI Suite also includes tools like the Edge AI Developer Cloud, which features dedicated neural networks in our STM32 model zoo, a board farm for real-world benchmarking, and more.

ST’s efforts ensure that engineers can work with existing frameworks. That’s why we developed a core technology (ST Edge AI Core) for optimizing and converting neural networks from popular AI frameworks to take full advantage of the Neural-ART Accelerator. Our ecosystem also aims to bolster collaboration with numerous hardware and software partners, and we are continually working on integration into wider ecosystems. For instance, developers can use STM32Cube.AI in conjunction with NVIDIA TAO Toolkit or the AWS STM32 ML at the Edge Accelerator, or experience our model zoo on Hugging Face, which is hosting an increasing amount of STM32 AI content.

How to get started?

The STM32N6 is a new milestone because, as the NPU, GPU, embedded RAM, Cortex-M55, peripherals, and other specifications show, it transforms high-performance edge AI applications on MCUs by enabling new use cases, such as computer vision, audio processing, and more. Even without its NPU, the STM32N6 still wins the crown of flagship STM32 by elevating advanced video and multimedia applications, allowing for enhanced UX and complex UIs that previously demanded dedicated and more expensive MCUs. In a nutshell, the STM32N6 inaugurates a new era of computing by making high-end and edge AI applications more accessible and approachable.

The best way to participate in this revolution is to grab one of the STM32N6 development boards already available. We are currently offering a Nucleo board and a Discovery Kit, and have just published STM32CubeN6, a dedicated software package with middleware and example code. We have also updated our ST Edge AI Suite to support the new device, and TouchGFX Designer comes with a board support package for the kit to ensure developers can use the new device rapidly and create impressive UIs. We are also thrilled to announce that third-party development boards featuring the STM32N6 will arrive at a later date.