ST and AWS (Amazon Web Services) are featuring AWS STM32 ML at the Edge Accelerator, an application example that uses our B-U585I-IOT02A Discovery Kit, our STM32Cube.AI Developer Cloud, and AWS services to run an audio classification model on an STM32U5. LACROIX, a member of the ST Partner Program, is already looking to use this application in smart cities. On our side, we’ll continue to add similar machine-learning applications to our GitHub in 2024. The introduction of this application at the AWS re:Invent conference also shows how technologies ST announced at the beginning of 2023, like our Model Zoo and Board Farm, are opening the door to new collaborations and broader adoption of AI at the edge.

What does it take to create an audio event detection application?

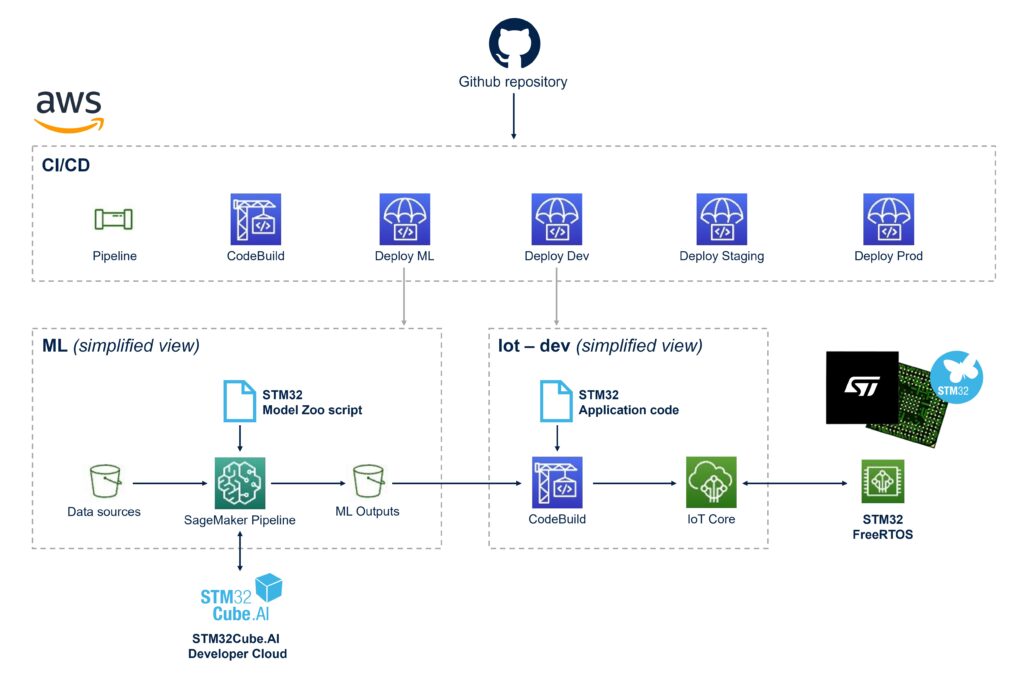

Our solution uses YAMNet-256, an audio event detection model from the ST Model Zoo (more on that later), deployed on the B-U585I-IOT02A Discovery Kit. To ensure seamless cloud connectivity, we used X-CUBE-AWS, an expansion package that integrates FreeRTOS with AWS IoT Core. As a result, our architecture supports the entire MLOps process: the machine learning stack handles data processing, model training, and evaluation, while the IoT stack handles automatic device flashing through over-the-air (OTA) updates, ensuring that all devices run the latest firmware and security patches.
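This post does not describe how the accelerator's OTA flow decides which devices to flash. As a minimal sketch of one common building block, the snippet below compares dotted version strings so a device only accepts a newer image. The function names `parse_version` and `needs_update` are hypothetical and not part of X-CUBE-AWS or FreeRTOS.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version string such as "1.2.10" into (1, 2, 10)."""
    return tuple(int(part) for part in text.strip().split("."))


def needs_update(running: str, available: str) -> bool:
    """Return True if the available firmware image is newer than the running one.

    Hypothetical helper for illustration only.
    """
    current = parse_version(running)
    candidate = parse_version(available)
    # Pad the shorter version with zeros so "1.2" and "1.2.0" compare as equal.
    width = max(len(current), len(candidate))
    current += (0,) * (width - len(current))
    candidate += (0,) * (width - len(candidate))
    # Tuples compare element by element, so (1, 2, 10) > (1, 2, 9),
    # whereas the raw strings "1.2.10" and "1.2.9" compare the other way round.
    return candidate > current
```

Comparing integer tuples rather than strings avoids the classic mistake where "1.2.10" sorts before "1.2.9".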

The pipeline stack orchestrates the CI/CD (Continuous Integration / Continuous Delivery) workflow. CI/CD is a core practice in development and operations (DevOps) because it lets developers continuously integrate their changes, optimize their code, and improve their applications. When working with neural networks, the ability to tweak a system to improve its accuracy is paramount, so developers automate the deployment of the ML and IoT stacks to manage the entire development lifecycle of their solution.
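One way to connect "improving accuracy" with "automated deployment" is a quality gate in the pipeline: a retrained model only moves to the fleet if it beats the model already in production. The sketch below assumes accuracy on a held-out test set is the metric and that a minimum gain of 0.5 percentage points is required; the class and function names, and the threshold, are hypothetical and not taken from the accelerator's pipeline.

```python
from dataclasses import dataclass


@dataclass
class EvalReport:
    model_id: str
    accuracy: float  # fraction of correctly classified clips on a held-out test set


def should_promote(candidate: EvalReport, current: EvalReport, min_gain: float = 0.005) -> bool:
    """Quality gate: ship the candidate only if it beats production by at least min_gain."""
    return candidate.accuracy >= current.accuracy + min_gain


def model_to_deploy(candidate: EvalReport, current: EvalReport, min_gain: float = 0.005) -> str:
    """Return the ID of the model the (hypothetical) deployment stage should push."""
    return candidate.model_id if should_promote(candidate, current, min_gain) else current.model_id
```

Requiring a small margin, rather than any improvement at all, keeps the pipeline from reflashing the whole fleet for gains that may just be noise in the test set.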

Finally, for device monitoring and data visualization, we used Amazon Managed Grafana to create dynamic, interactive dashboards for real-time monitoring and analysis. Seeing AWS STM32 ML at the Edge Accelerator come together is gratifying because we’ve been promoting the benefits of machine learning at the edge since we launched STM32Cube.AI in 2019, and the industry is clearly moving toward greater adoption of AI on microcontrollers for all sorts of applications. In this instance, developers use a version of the YAMNet audio classification model optimized for our STM32 MCUs to run an audio event detection program. After training, the neural network can distinguish between a wide range of sounds, from a dog barking to someone sneezing or a rooster crowing.
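Audio event detection models in the YAMNet family typically score short audio patches rather than a whole recording, so an application still has to turn several per-patch outputs into one decision. The sketch below assumes the model returns one score per class for each patch; it averages the scores over a clip and rejects the result when no class is confident enough. The labels, the 0.5 threshold, and `classify_clip` are illustrative assumptions, not the accelerator's actual post-processing.

```python
from typing import Optional, Sequence


def classify_clip(
    patch_scores: Sequence[Sequence[float]],
    labels: Sequence[str],
    threshold: float = 0.5,
) -> Optional[str]:
    """Average per-patch class scores over a clip and return the top label.

    Returns None when the clip is empty or no class reaches `threshold`,
    so background noise is not forced into one of the known classes.
    """
    if not patch_scores:
        return None
    n = len(patch_scores)
    # Mean score of each class across all patches in the clip.
    mean = [sum(patch[c] for patch in patch_scores) / n for c in range(len(labels))]
    best = max(range(len(labels)), key=lambda c: mean[c])
    return labels[best] if mean[best] >= threshold else None
```

For example, with hypothetical labels `["dog_bark", "sneeze", "rooster"]`, two patches scored `[0.7, 0.2, 0.1]` and `[0.6, 0.3, 0.1]` average to about 0.65 for `dog_bark`, which clears the threshold.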

What did it take to make it happen today?

X-CUBE-AWS

Even before delving into the machine learning aspect of the demonstration, X-CUBE-AWS was one of the reasons AWS chose to work with our STM32U5 IoT Discovery Kit. The software package contains a reference integration of FreeRTOS™ with AWS IoT that connects easily and securely to the AWS cloud. Developers can thus spend their time on the AI itself rather than figuring out how to manage secure connectivity to a remote server. We even provide a reference implementation based on STM32Cube tools and software, which further simplifies IoT designs that leverage the rest of the STM32 ecosystem.
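Because X-CUBE-AWS takes care of the secure MQTT connection to AWS IoT Core, the application mostly has to decide what to publish. The sketch below shows one possible shape for a detection event: a topic string plus a compact JSON payload. The topic layout, the field names, and `build_event_message` are hypothetical; the accelerator's real message schema may differ, and on the device this logic would live in the C firmware rather than in Python.

```python
import json
import time
from typing import Optional, Tuple


def build_event_message(
    thing_name: str,
    label: str,
    confidence: float,
    timestamp: Optional[int] = None,
) -> Tuple[str, str]:
    """Return an (MQTT topic, JSON payload) pair for one detected audio event.

    Topic layout and field names are hypothetical, for illustration only.
    """
    topic = f"devices/{thing_name}/audio-events"
    payload = json.dumps(
        {
            "label": label,
            # Three decimals are plenty for a dashboard and keep messages small.
            "confidence": round(confidence, 3),
            "ts": int(time.time()) if timestamp is None else timestamp,
        },
        sort_keys=True,
    )
    return topic, payload
```

Publishing a short label and score instead of raw audio keeps bandwidth low and avoids sending recordings off the device.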

STM32 AI Model Zoo and STM32 Board Farm

The STM32 AI Model Zoo and STM32 Board Farm are two features we launched with the STM32Cube.AI Developer Cloud, and the fact that we are the only MCU maker offering such technology also explains our presence at AWS re:Invent. By using the audio model from our Model Zoo, developers start with an already optimized algorithm. Teams working on a final product can further prune their neural network to increase performance. However, when gauging their needs, performing a feasibility study on a microcontroller, or building a proof of concept, engineers can trust that the version in our Model Zoo will run efficiently on STM32 devices.
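The post does not say how teams should prune their networks. As an illustration of one widely used technique, unstructured magnitude pruning, the sketch below zeroes the smallest-magnitude fraction of a flat weight list. It is a plain-Python teaching example under that assumption, not the method used by the Model Zoo or STM32Cube.AI, and `magnitude_prune` is a hypothetical name.

```python
from typing import List, Sequence


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude.

    Exactly round(len(weights) * sparsity) weights are zeroed; ties at the
    cutoff are broken by position.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = round(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned
```

In practice, pruning is usually followed by fine-tuning to recover accuracy, and unstructured zeros only save memory or time if the deployment toolchain can compress or skip them.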

The STM32 Board Farm, accessible through the STM32Cube.AI Developer Cloud online platform, enables integrators to benchmark their neural network on a wide range of STM32 boards and immediately find the one with the best price-per-performance ratio. Before the Board Farm, developers had to buy boards and compile a separate application for each device, a cumbersome and time-consuming process. With the STM32Cube.AI Developer Cloud, users upload the algorithm and run it on real hardware boards hosted in the cloud. The platform shows them inference times, memory requirements, and the optimized model topology on various STM32 microcontrollers. Hence, beyond the AWS STM32 ML at the Edge Accelerator, we are offering a new way of approaching AI on MCUs that makes the process more straightforward.
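Once benchmark results are available, picking a board is a small filtering and ranking problem. The sketch below assumes each result reports latency, the RAM and flash the model needs, what the board offers, and a unit price (a field the Board Farm itself does not provide; it would come from your own sourcing data). It discards boards that cannot hold the model or miss a latency budget, then ranks the rest by price per performance, taking performance as inferences per second. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class BenchResult:
    board: str
    inference_ms: float     # measured latency of one inference
    ram_kib: float          # RAM the model needs on this board
    flash_kib: float        # flash the model needs on this board
    ram_avail_kib: float    # RAM available on the board
    flash_avail_kib: float  # flash available on the board
    unit_price: float       # price of one board, in any consistent currency


def best_value(results: Sequence[BenchResult], max_inference_ms: float) -> Optional[BenchResult]:
    """Pick the fitting board with the lowest price-per-performance ratio.

    Performance is inferences per second (1000 / inference_ms), so
    price / performance is proportional to unit_price * inference_ms.
    """
    fits = [
        r for r in results
        if r.ram_kib <= r.ram_avail_kib
        and r.flash_kib <= r.flash_avail_kib
        and r.inference_ms <= max_inference_ms
    ]
    if not fits:
        return None
    return min(fits, key=lambda r: r.unit_price * r.inference_ms)
```

Applying the memory and latency constraints first matters: a very fast board is useless if the model does not fit in its RAM.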

AWS ecosystem

The new demo code on GitHub will resonate with many teams because it uses various Amazon technologies. For instance, thanks to Amazon SageMaker, developers can more easily retrain a neural network to take advantage of a new dataset. Similarly, the AWS Cloud Development Kit helps deploy new firmware to the existing fleet, while Grafana provides a dashboard and analytical tools for real-time monitoring. Put simply, Amazon is presenting AWS STM32 ML at the Edge Accelerator at its conference because it shows how combining Amazon’s technology with ours can help the industry create and deploy powerful edge AI systems.

AWS STM32 ML at the Edge Accelerator: What will it yield tomorrow?

Future ST applications

The AWS STM32 ML at the Edge Accelerator is a symbolic first step for ST, as we plan to release additional AI application examples that take advantage of the Amazon ecosystem. While we can’t divulge details, we are looking at traditional neural networks with concrete applications. For instance, we are studying hand posture detection using a time-of-flight sensor. Instead of relying on image recognition, a device like the VL53L5, with its 64 zones, could feed a machine learning algorithm that detects whether a thumb is up or down. This would create a new way to interact with a computer system while decreasing costs, since time-of-flight sensors are cheaper and require fewer resources than camera sensors.
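To make the time-of-flight idea concrete: instead of an image, the network would receive one distance per zone. The sketch below, assuming the VL53L5 runs in its 8x8 mode and reports distances in millimeters, turns one frame into 64 normalized features that a small classifier could consume. The 50 mm to 400 mm working range, the handling of invalid zones, and `frame_to_features` are illustrative assumptions, not ST's upcoming example.

```python
from typing import List, Optional, Sequence

ZONES = 64  # the VL53L5 in its 8x8 multizone mode


def frame_to_features(
    distances_mm: Sequence[Optional[float]],
    near_mm: float = 50.0,
    far_mm: float = 400.0,
) -> List[float]:
    """Map one 8x8 ranging frame to 64 values in [0, 1] for a classifier.

    Zones with no valid target (None) are treated as "far" (1.0). The
    near/far limits are assumed values for a hand held above the sensor.
    """
    if len(distances_mm) != ZONES:
        raise ValueError(f"expected {ZONES} zones, got {len(distances_mm)}")
    span = far_mm - near_mm
    features = []
    for d in distances_mm:
        if d is None:
            features.append(1.0)
        else:
            # Clip to the working range, then scale linearly to [0, 1].
            clipped = min(max(d, near_mm), far_mm)
            features.append((clipped - near_mm) / span)
    return features
```

A 64-value input is tiny compared with even a low-resolution camera frame, which is what makes the memory and cost argument above plausible.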

Another example could be human activity detection, such as walking, running, or climbing stairs. Interestingly, we already offer applications that use the machine learning core of our LSM6DSOX sensor. In this instance, however, developers would run the neural network on the microcontroller, giving them an alternative that lets them better tailor their applications to their needs. Ultimately, the application examples are here to help the community explore as many options as possible, making machine learning more accessible and a fit for more bills of materials. After all, cloud-connected autonomous devices are often seen as the next milestone for IoT applications, and these new examples feed the current edge AI trends in existing connected industries.
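Running human activity recognition on the MCU usually starts with cutting the accelerometer stream into fixed-length windows that the network classifies one at a time. The sketch below shows that windowing step, assuming (x, y, z) samples and a caller-chosen window length and hop; a hop of half the window gives the 50% overlap often used for this task. The names are hypothetical and not taken from any ST example.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one (x, y, z) accelerometer reading


def sliding_windows(samples: Sequence[Sample], window: int, hop: int) -> List[List[Sample]]:
    """Split a stream into fixed-length, possibly overlapping windows.

    Trailing samples that do not fill a complete window are dropped.
    """
    if window <= 0 or hop <= 0:
        raise ValueError("window and hop must be positive")
    return [
        list(samples[start:start + window])
        for start in range(0, len(samples) - window + 1, hop)
    ]
```

Overlapping windows let the device report a new activity label more often than once per full window, at the cost of running inference more frequently.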

Existing industry adoption

The interest from LACROIX also shows why the AWS STM32 ML at the Edge Accelerator is breaking ground in the industry. Researchers are already using microphones and machine learning to create better smart city infrastructure. For instance, a paper published in 2020 [1] examines how a neural network and an off-the-shelf microphone could replace a significantly more expensive phonometer to track noise pollution in urban environments. Similarly, a study published in 2022 [2] looks at noise classification in smart cities, showing that the AWS and ST application addresses challenges researchers are currently raising. Audio capture could also help track vehicular or pedestrian traffic to better monitor a city’s activity.
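Noise-pollution tracking of the kind described in these papers rests on a simple measurement: how loud each short frame is, and how loud a whole period is on average. The sketch below computes an RMS level in dBFS for 16-bit PCM frames and combines frame levels into an equivalent continuous level (Leq) by averaging energy, not decibels. The function names are hypothetical; the results are relative to digital full scale, so matching a phonometer would additionally require microphone calibration to dB SPL and, typically, A-weighting, neither of which is shown here.

```python
import math
from typing import Sequence


def frame_dbfs(samples: Sequence[int], full_scale: float = 32768.0) -> float:
    """RMS level of one 16-bit PCM frame in dB relative to full scale (dBFS)."""
    if not samples:
        raise ValueError("empty frame")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms / full_scale) if rms > 0 else float("-inf")


def equivalent_level(levels_db: Sequence[float]) -> float:
    """Energy-average equal-length frame levels into one equivalent level (Leq)."""
    if not levels_db:
        raise ValueError("no frames")
    # Convert each level back to relative energy, average, then return to dB.
    mean_energy = sum(10.0 ** (level / 10.0) for level in levels_db) / len(levels_db)
    return 10.0 * math.log10(mean_energy) if mean_energy > 0 else float("-inf")
```

Averaging energy rather than decibels means one loud event (a passing truck, for example) raises Leq far more than a plain mean of the dB values would, which matches how noise exposure is usually assessed.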

Astute blog readers will remember that others in the industry already use ST and AWS IoT solutions together. One example is IoTConnect, which Avnet showed at the ST Technology Tour 2023. The company used the same STM32U5 Discovery kit and AWS to create a dashboard that displayed sensor data from the ST board. The AWS STM32 ML at the Edge Accelerator goes a step further by walking developers through the creation of a machine learning application at the edge, but the idea of combining our technologies with AWS solutions has been gaining ground for some time. Hence, today’s application is more than just a demo; it lights the way for many in the industry.

- Download AWS STM32 ML at the Edge Accelerator

- Grab the B-U585I-IOT02A

- Sign up for the STM32Cube.AI Developer Cloud

- How AWS, STMicroelectronics, and LACROIX Are Making Cities Smarter and Safer with Edge AI

- [1] Monti L, Vincenzi M, Mirri S, Pau G, Salomoni P. RaveGuard: A Noise Monitoring Platform Using Low-End Microphones and Machine Learning. Sensors (Basel). 2020;20(19):5583. Published 2020 Sep 29. doi:10.3390/s20195583

- [2] Ali HA, Rashid RA, Hamid SZA. A machine learning for environmental noise classification in smart cities. Indonesian Journal of Electrical Engineering and Computer Science. 2022 Mar;25(3):1777-1786. doi:10.11591/ijeecs.v25.i3.pp1777-1786