Using Edge Impulse, it is possible to create intelligent device solutions that embed tiny machine learning (ML) and deep neural network (DNN) models. The cloud-based solution abstracts away the complexity of real-world sensor data collection and storage, feature extraction, ML and DNN model training, conversion to embedded code, and model deployment on STM32 MCUs. Without installing any local AI framework, engineers can generate the model and export it into their STM32 projects with a single function call. All generated neural networks now fully utilize STM32Cube.AI so that they run as fast and as energy-efficiently as possible, and the firmware can be fully customized using STM32CubeMX.

Deploying machine learning (ML) models on microcontrollers is one of the most exciting developments of the past few years, allowing small battery-powered devices to detect complex motion, recognize sounds, classify images, or find anomalies in sensor data. To make building and deploying these models accessible to every embedded developer, STMicroelectronics and Edge Impulse have been working together to integrate support for STM32CubeMX and STM32Cube.AI into Edge Impulse. Edge Impulse Cloud can now export neural networks through an STM32Cube.AI engine, which ensures the best possible efficiency, into a CMSIS-PACK compatible with STM32CubeMX projects. This gives developers an easy way to collect data, build models, and deploy them to any STM32 MCU.

Machine Learning for small devices

Machine learning on embedded systems (often called TinyML) makes it possible to build small devices that make smart decisions without sending data to the Cloud, which is great from both an efficiency and a privacy perspective. To run deep learning models (based on artificial neural networks) on microcontrollers, ST launched STM32Cube.AI, a software package that takes pre-trained deep learning models and converts them into highly optimized C code that runs on STM32 MCUs. Extracting the right features, building a quality dataset, and training the model so that it can be deployed on an STM32 are all critical steps in building an ML-based solution.

Machine learning makes it easy

Embedded developers might be naturally skeptical of machine learning. Analysis of sensor data on embedded devices is nothing new. For decades, developers have been using signal processing to extract interesting features from raw data. The result of the signal processing is then interpreted through simple rule-based systems, e.g. a message is sent when the total energy in a signal crosses a threshold. And while these systems work well, it’s hard to detect complex events, as you’d need to plan for every potential state of the system.
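For contrast with what follows, the hand-written rule described above ("send a message when the total energy in a signal crosses a threshold") can be sketched in a few lines of C. This is a minimal illustration only: the threshold value and function names are hypothetical, and in a real product the threshold would be tuned by hand for each sensor and use case.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical threshold, in squared sensor units, tuned by hand for one device. */
#define ENERGY_THRESHOLD 1000.0f

/* Total energy of one window of raw sensor samples: the sum of squared samples. */
static float signal_energy(const float *samples, size_t n)
{
    float energy = 0.0f;
    for (size_t i = 0; i < n; i++) {
        energy += samples[i] * samples[i];
    }
    return energy;
}

/* Rule-based decision: report an event only when the energy crosses the fixed threshold. */
static bool should_send_message(const float *samples, size_t n)
{
    return signal_energy(samples, n) > ENERGY_THRESHOLD;
}
```

A single scalar threshold like this works well for "loud vs. quiet" decisions, but every additional state the system can be in needs another hand-written rule, which is exactly where this approach stops scaling.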

Edge Impulse helps visualize features to understand complex datasets

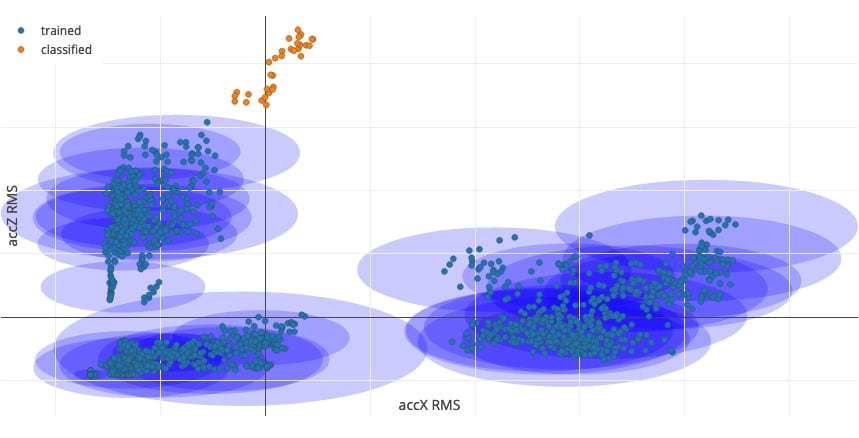

What machine learning lets us do is find these boundaries and thresholds in a much more fine-grained manner. For example, for anomaly detection you can train a machine learning model (classical or neural network) that looks at all the data in your dataset, clusters it based on the output of a signal processing pipeline (still the same DSP instructions you'd always use), and then compares new data to those clusters. The model learns all the potential variations in your data and creates thresholds that are much more precise and fine-grained than anything that can be built by hand.
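The "compare new data to the clusters" step can be illustrated with a nearest-cluster check. This is a minimal sketch, assuming a K-means-style model in which each learned cluster is summarized by a center and a radius in feature space; the struct, sizes, and scoring formula are hypothetical and are not the code Edge Impulse generates. Squared distances are used so the sketch needs no math library.

```c
#include <float.h>
#include <stddef.h>

#define NUM_FEATURES 3 /* hypothetical size of the DSP feature vector */
#define NUM_CLUSTERS 2 /* hypothetical number of clusters learned at training time */

/* One learned cluster: its center and the radius covering its training points (radius > 0). */
typedef struct {
    float center[NUM_FEATURES];
    float radius;
} cluster_t;

/* Squared Euclidean distance between two feature vectors. */
static float squared_distance(const float *a, const float *b)
{
    float sum = 0.0f;
    for (size_t i = 0; i < NUM_FEATURES; i++) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

/* Smallest (distance / radius)^2 over all clusters.
   <= 1.0: the new point falls inside at least one known cluster.
   >  1.0: the point lies outside every cluster, so it is a potential anomaly. */
static float anomaly_score(const float *features, const cluster_t *clusters, size_t n_clusters)
{
    float best = FLT_MAX;
    for (size_t c = 0; c < n_clusters; c++) {
        float r2 = clusters[c].radius * clusters[c].radius;
        float score = squared_distance(features, clusters[c].center) / r2;
        if (score < best) {
            best = score;
        }
    }
    return best;
}
```

The boundaries here (the centers and radii) come from the training data rather than from hand-picked constants, which is the key difference from the energy-threshold rule.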

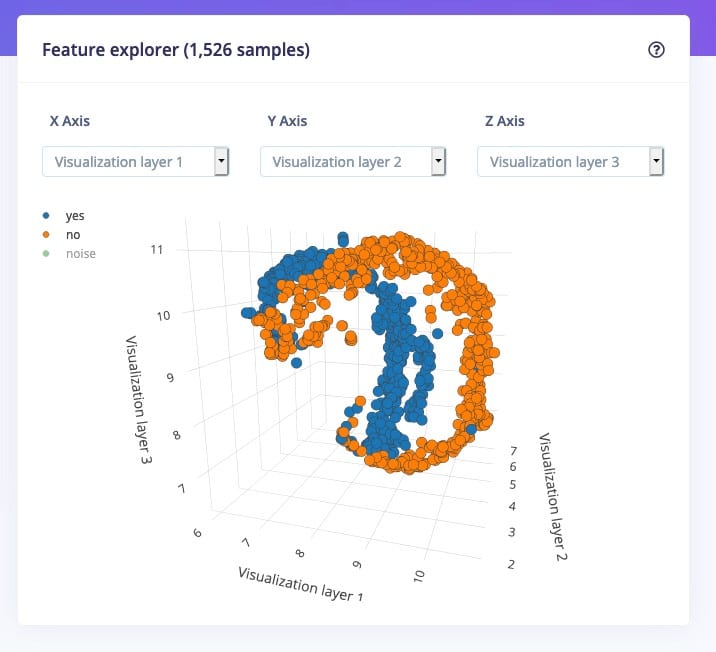

And because these thresholds can be calculated automatically in such a fine-grained manner, it's possible to detect much more complex events. It's relatively easy to write code that detects when a microphone picks up sounds above 100 dB, but very hard to detect whether a person said 'yes' or 'no'. This is where machine learning really shines.

Not a black box

But handing control to a machine learning model can be scary. If you deploy a model on millions of devices, you want to be sure that the model really works and that you haven't missed any edge cases. To help with this, Edge Impulse favors traditional signal processing pipelines paired with small ML models over deep 'black-box' ML models, and it provides many visual tools to help you assess the quality of your dataset, analyze new data against the current model, and quickly test models on real-world devices. The Feature explorer plots the features extracted from every generated window in a 3D graph, allowing developers to explore the dataset and judge whether the features will be easily separable by the model.
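To make "features extracted from every generated window" concrete, the sketch below slides a fixed-size window over a raw signal and reduces each window to a three-value feature vector, which is the kind of point a 3D feature plot would show. The chosen features (mean, variance, peak-to-peak range) and all names are hypothetical examples, not the processing blocks Edge Impulse actually uses.

```c
#include <stddef.h>

#define NUM_FEATURES 3

/* Hypothetical per-window summary: mean, variance, and peak-to-peak range. */
typedef struct {
    float values[NUM_FEATURES];
} features_t;

/* Reduce one window of raw samples (len > 0) to a small feature vector. */
static features_t extract_features(const float *window, size_t len)
{
    float sum = 0.0f, min = window[0], max = window[0];
    for (size_t i = 0; i < len; i++) {
        sum += window[i];
        if (window[i] < min) min = window[i];
        if (window[i] > max) max = window[i];
    }
    float mean = sum / (float)len;

    float var = 0.0f;
    for (size_t i = 0; i < len; i++) {
        float d = window[i] - mean;
        var += d * d;
    }
    var /= (float)len;

    features_t f = {{mean, var, max - min}};
    return f;
}

/* Slide a window of window_len samples over the signal, advancing by stride (> 0),
   and write one feature vector per complete window. Returns the number of windows. */
static size_t extract_all_windows(const float *signal, size_t signal_len,
                                  size_t window_len, size_t stride,
                                  features_t *out, size_t max_out)
{
    size_t count = 0;
    for (size_t start = 0; start + window_len <= signal_len && count < max_out; start += stride) {
        out[count++] = extract_features(&signal[start], window_len);
    }
    return count;
}
```

With overlapping windows (stride smaller than the window length), a short recording yields many feature vectors; if the vectors of different classes form separate groups when plotted, even a small model should be able to tell them apart.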

Add neural network models on top using STM32Cube.AI

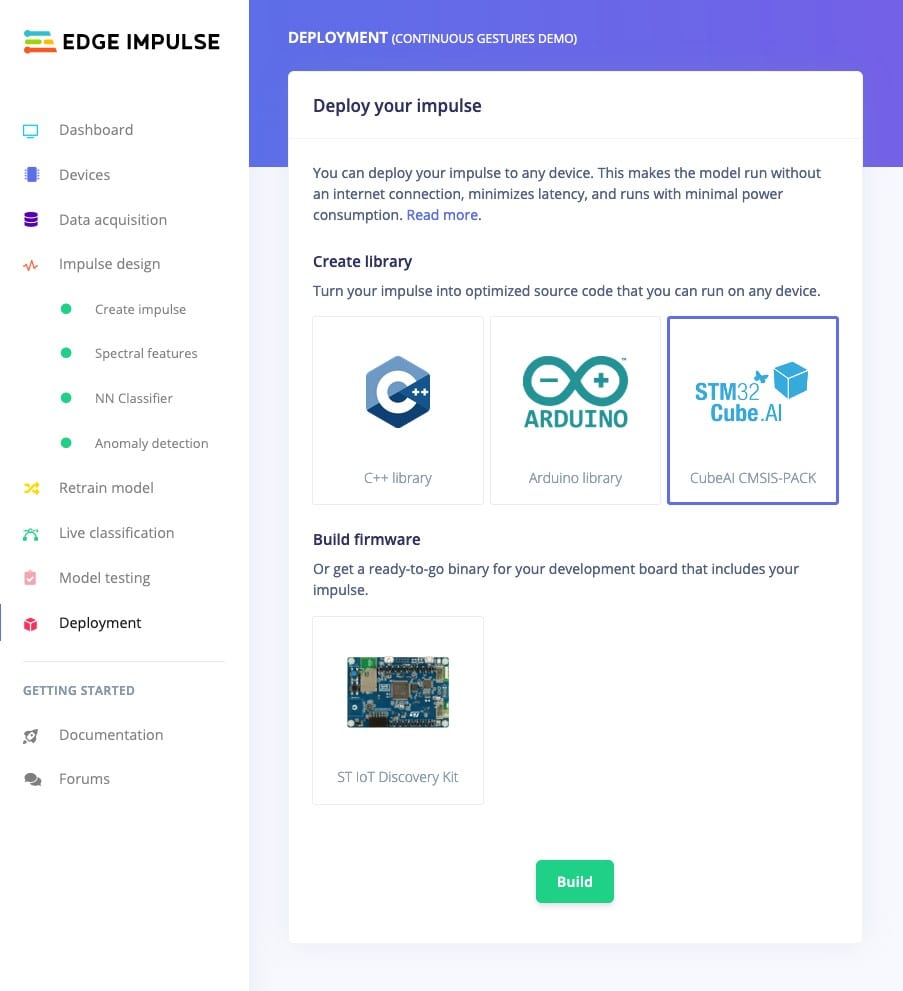

When building neural networks for classification or regression tasks, for instance, it is critical to optimize the model's footprint and execution time for the target microcontroller. Developers benefit from all STM32Cube.AI optimizations automatically, because the tool is called in the Cloud whenever the STM32Cube.AI CMSIS-PACK export option is selected. STM32Cube.AI performs model quantization and other optimizations that compress the model with minimal performance degradation, and it produces optimized C code for all STM32 microcontrollers.
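The article does not detail STM32Cube.AI's internal quantization scheme. As a generic illustration only, the sketch below shows the widely used affine int8 scheme, where a float x is stored as q = round(x / scale) + zero_point and recovered as x ≈ scale · (q − zero_point). The scale and zero-point values in any real model come from the converter; the function names here are hypothetical.

```c
#include <stdint.h>

/* Affine int8 quantization: q = round(x / scale + zero_point), clamped to [-128, 127]. */
static int8_t quantize(float x, float scale, int32_t zero_point)
{
    float r = x / scale + (float)zero_point;
    /* Round half away from zero without needing libm: shift by 0.5, then truncate. */
    r = (r >= 0.0f) ? r + 0.5f : r - 0.5f;
    /* Clamp in float before casting so out-of-range inputs cannot overflow the cast. */
    if (r < -128.0f) r = -128.0f;
    if (r > 127.0f) r = 127.0f;
    return (int8_t)r;
}

/* Map an int8 value back to its approximate float value. */
static float dequantize(int8_t q, float scale, int32_t zero_point)
{
    return scale * (float)((int32_t)q - zero_point);
}
```

Each weight or activation then takes one byte instead of four, and the round-trip error stays within half a scale step for in-range values (for example, 0.3 quantized with a scale of 0.5 comes back as 0.5). This is why quantization can shrink a model substantially with only a small accuracy cost.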

The 'STM32Cube.AI CMSIS-PACK' deployment option packages the entire model, including all signal processing code and machine learning models, into a CMSIS-PACK that integrates with STM32CubeIDE. This pack runs on any Cortex-M4F, Cortex-M7, or Cortex-M33 STM32 MCU. To add the CMSIS-PACK to your STM32 project, follow the step-by-step guide. You can then develop custom firmware, in the STM32Cube environment, for any STM32-based product that embeds your machine learning model.

Getting started

To get started with Edge Impulse and STM32Cube.AI, sign up for an Edge Impulse account, order your ST IoT Discovery Kit, and follow our tutorials and instruction guides. You will quickly have a machine learning model that runs on any STM32-based product.

If you want to learn more about machine learning on embedded systems, want to see a live demo of STM32Cube.AI and Edge Impulse in action, or want to win one of the 20 ST IoT Discovery Kits: sign up for our joint webinar on 21 July.