What do cars from the 1960s and securing an embedded Linux system have in common? In a presentation at the 2015 Linux Security Summit, Konstantin Ryabitsev, a system administrator on the Linux Foundation’s Collaborative Projects IT team, compared cars and IT security. He explained that automobiles were reliable by the end of the previous century. They transported people with minimal risk of malfunction. However, vehicles didn’t account for human error, and drivers had little protection in the event of a crash. Today, carmakers use airbags, safety shutdowns, anti-skid systems, collapsible steering columns, collision detection, anticipatory braking, and more. Comparatively, IT security today is like cars in the 1960s: it’s reliable but doesn’t account for human error.

Ryabitsev’s presentation looks at securing Linux servers. While the details of his talk seldom apply to embedded systems, the principles remain relevant. Too many companies still overlook significant security aspects of their design. Engineers work on quality and safety but fail to develop contingency plans for security failures. Some teams even relegate security to an afterthought because they don’t value their data. As a result, many fail to see how human errors or new attack vectors could harm users or severely disrupt businesses. We thus released a white paper so embedded systems with an STM32MP1 or an STM32MP2 can look a lot more like cars today: safe, even when things go wrong.

Classification of attacks

Physical attacks

In his textbook Design Principles for Embedded Systems [1], KCS Murti classifies security attacks into two main categories: physical and logical. Physical attacks may be invasive, such as hackers accessing the silicon or interrupting typical operations. They may also be non-invasive, the most common being side-channel attacks. In such instances, hackers may observe the clock, memory behavior, or power consumption patterns to infer what the device is computing. ST has been raising awareness about these attacks for a while. Consequently, the STM32MP1 and STM32MP2 offer important protections against both types of physical attacks, and the risk of criminals physically breaking into devices remains low.

Logical attacks

When companies talk about security in embedded systems, they traditionally refer to measures guarding against logical attacks. In a nutshell, a logical attack aims to gain access to data or unlawfully obtain privileges to run malicious software that will reveal even more data or allow hackers to disrupt the system and others connected to it. When popular media cover security topics, they mostly talk about logical attacks. They are the most common due to their relative ease of implementation and low cost as they traditionally exploit a bug or a vulnerability.

Logical attacks may also target hardware features or security systems. For instance, the IT world shook when Spectre and Meltdown first came to light. These two vulnerabilities allowed programs to get sensitive information stored in memory or bypass security safeguards. According to Arm, the Cortex-M4 and Cortex-A7 cores of the STM32MP1, as well as the Cortex-M33 and Cortex-A35 cores of the STM32MP2, aren’t subject to these issues. Similarly, Heartbleed was a flaw in the OpenSSL library that allowed hackers to steal supposedly protected information. Developers had to rapidly check their implementations and patch their systems or risk significant repercussions. OpenSTLinux uses a version of OpenSSL that doesn’t suffer from this vulnerability.

Human errors

The cases above are object lessons because they were so unexpected. Developers must anticipate the unpredictable, plan for thorough audits, and guarantee the rapid deployment of patches. It’s the reason why the ST white paper goes over major concepts and helps managers understand the fundamentals. Knowledge is also critical because, in most cases, hackers don’t even need to exploit an exotic vulnerability. Most attacks today rely on human error or social engineering. It’s easy to leave a debug port open, erroneously implement a cryptographic operation, or have the administrative password compromised. In IT security, as in medicine, when hearing hoofbeats, expect horses, not zebras.

Security on embedded systems is also more robust thanks to the ecosystem available to developers. ST upstreams its drivers and works closely with the open-source community. We can thus patch issues rapidly and use standard tools to make security more accessible. We also help customers implement critical security features with initiatives like STM32Trust or the ST Partner Program. For instance, the OpenSTLinux distribution gives the tools necessary to implement secure boot and the foundations to support secure firmware updates. However, those wishing to outsource such tasks can count on ST partners like Witekio and its FullMetalUpdate for reliability and efficiency. Ultimately, the white paper assures decision-makers and industry experts that they aren’t alone in this fight.

1. Create, manage, and promote trust

What is trust?

To make IT more reliable, especially when dealing with human errors, companies must create a chain of trusted devices and systems. Users can’t have the assurance of security if the application layer isn’t secure. Likewise, developers can’t create a secure app if the operating system they rely on is not trustworthy. Hence, IT security depends on the notion of trust. According to Murti, trust is the assurance that a hardware or software component will “enforce a specified security policy” [2]. For instance, an operating system must guarantee that regular users will only have limited privileges and not obtain administrative rights. Similarly, an application must not access resources outside of those defined by its security policies.

What is a chain of trust (CoT)?

An axiom of IT security is that an upper layer cannot truly be secured if the lower layer it depends on is not trusted. For example, an application isn’t secure if the OS it relies on isn’t trusted. Indeed, even if the software encrypts all the data it collects, the fact that the OS isn’t trustworthy means the user has no guarantee that a hacker didn’t compromise the system or steal the data, the encryption key, or more. Similarly, a trusted OS isn’t truly secure if the hardware it runs on cannot be trusted. Hence, a truly secure embedded system relies on cascading checks of integrity that the industry calls the chain of trust (CoT).
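The cascading checks described above can be illustrated with a minimal Python sketch. It is not ST’s boot flow: the layer names, the `Stage` type, and the helper functions are hypothetical, and plain SHA-256 digests stand in for the signature checks a real secure boot would typically use. Each layer carries the digest it expects of the next one, and only the digest of the lowest layer needs to live in trusted hardware.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Stage:
    """One layer of the chain (hypothetical model, not a real image format)."""
    name: str
    code: bytes
    next_digest: bytes  # digest this layer expects of the layer above it


def stage_digest(stage: Stage) -> bytes:
    # The digest covers the code AND the expectation about the next layer,
    # so tampering with either one is detected by the layer below.
    return hashlib.sha256(stage.code + stage.next_digest).digest()


def build_chain(parts: List[Tuple[str, bytes]]) -> Tuple[List[Stage], bytes]:
    """Build the chain from the top layer down; return stages and root digest."""
    stages: List[Stage] = []
    next_digest = b""  # the top layer has nothing above it
    for name, code in reversed(parts):
        stage = Stage(name, code, next_digest)
        stages.append(stage)
        next_digest = stage_digest(stage)
    stages.reverse()
    return stages, next_digest  # the last digest computed is the root digest


def first_untrusted_layer(root_digest: bytes, stages: List[Stage]) -> Optional[str]:
    """Walk up from the hardware; return the first layer that fails its check."""
    expected = root_digest
    for stage in stages:
        if not hmac.compare_digest(stage_digest(stage), expected):
            return stage.name
        expected = stage.next_digest
    return None  # every layer matched: the whole chain is trusted


if __name__ == "__main__":
    stages, root = build_chain(
        [("boot loader", b"bl"), ("operating system", b"os"), ("application", b"app")]
    )
    print(first_untrusted_layer(root, stages))
```

In this model, modifying the operating system breaks the check performed by the boot loader, so nothing above that point is ever trusted, which mirrors the axiom that an upper layer cannot be more trustworthy than the one beneath it.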

In the classical sense, a CoT represents a workflow of trusted layers from the lowest level of the hardware up to the application. However, managers must understand that a true chain of trust extends beyond the system itself. Developers must protect their work, have guidelines to reduce risk, and anticipate unfortunate events. What good is an encryption key if a disgruntled employee steals it? Similarly, companies must ensure that the firmware they ship to their OEM is secure. Companies that work hard on securing their programs must have the assurance that a malicious actor isn’t accessing their source code. True IT security means trying to plan for all sorts of human behaviors.

This is why the industry adopted cryptographic standards built on public algorithms and mathematically hard problems. These systems assume attackers are familiar with them. Instead of relying on a developer to obscure access, experts now expect hackers to know exactly how the algorithm works; breaking it is simply too expensive and computationally intensive to be realistic. It explains, among other things, why so many companies choose standards like RSA, AES, or new post-quantum cryptographic algorithms like CRYSTALS-Dilithium. Attackers know them well but can’t break them if they are correctly implemented. And with hardware IPs accelerating these standards, it’s possible to run them with little to no performance penalty.
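To give a sense of what “too expensive” means, here is a small back-of-the-envelope calculation in Python. It only models exhaustive key search against a symmetric cipher such as AES; attacks on RSA work differently and are not covered. The attacker speed of one trillion guesses per second is an arbitrary assumption chosen for illustration.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def years_to_search(key_bits: int, guesses_per_second: float) -> float:
    """Average time to find a key by brute force (half the keyspace on average)."""
    keyspace = 2 ** key_bits
    return (keyspace / 2) / guesses_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical attacker trying 10^12 keys per second.
    for bits in (56, 128, 256):
        print(f"{bits}-bit key: {years_to_search(bits, 1e12):.3e} years")
```

Under that assumption, a 56-bit key falls in a matter of hours, while a 128-bit key would take on the order of 10^18 years, which is why knowing the algorithm does not help an attacker who lacks the key.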

What is a root of trust (RoT)?

As the UEFI Forum explains, the chain of trust on a computing system starts with a hardware root of trust. The latter is a mechanism that guarantees the low-level part of the system is trusted because the code it uses to boot passes various verifications and certifications. In a nutshell, a root of trust offers a few guarantees. It ensures that no one enabled a new debug port or changed the firmware, among other things. It often relies on an immutable root key and a secure boot loader to verify the code’s integrity. The fact that the key is in the hardware also prevents hackers from cloning the system.
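The following Python sketch illustrates the idea of a root key that refuses modified firmware or an unexpectedly enabled debug port. It rests on several assumptions: the `BootImage` layout and function names are invented for this example, and HMAC-SHA256 with a shared secret stands in for the asymmetric signature a real device would more likely use, simply because Python’s standard library offers no signature verification.

```python
import hashlib
import hmac
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class BootImage:
    """Hypothetical boot image: code, one configuration flag, and its tag."""
    code: bytes
    debug_port_enabled: bool
    tag: bytes  # authentication tag computed when the image was produced


def _authenticated_bytes(code: bytes, debug_port_enabled: bool) -> bytes:
    # The configuration flag is covered by the tag, not just the code.
    flag = b"\x01" if debug_port_enabled else b"\x00"
    return flag + code


def sign_image(root_key: bytes, code: bytes, debug_port_enabled: bool = False) -> BootImage:
    """Done once, in a trusted environment (stand-in for real signing)."""
    tag = hmac.new(root_key, _authenticated_bytes(code, debug_port_enabled),
                   hashlib.sha256).digest()
    return BootImage(code, debug_port_enabled, tag)


def root_of_trust_accepts(root_key: bytes, image: BootImage) -> bool:
    """Done at every boot, using the key stored immutably in hardware."""
    expected = hmac.new(root_key,
                        _authenticated_bytes(image.code, image.debug_port_enabled),
                        hashlib.sha256).digest()
    return hmac.compare_digest(expected, image.tag)  # constant-time comparison


if __name__ == "__main__":
    key = b"example-root-key-not-for-real-use"
    image = sign_image(key, b"first-stage boot loader")
    print(root_of_trust_accepts(key, image))
    print(root_of_trust_accepts(key, replace(image, debug_port_enabled=True)))
```

Because the flag is part of the authenticated data, switching the debug port on after the fact invalidates the image just as surely as changing the code does.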

Given how important the firmware is in establishing a root of trust, many are starting to use encrypted firmware. Put simply, the code loaded in the MCU or MPU is unreadable from the outside. The host device must first securely decrypt it internally, so no human eye can peek into the decrypted code, and then run checks to ensure its integrity. Microprocessors like the STM32MP13 and the STM32MP25 have specific memory protection mechanisms and capabilities to guarantee robust performance despite the added decryption step. Encryption also ensures that industrial spies can’t read the code.

What are Secure Boot, Secure Firmware Install, and Secure Module Install?

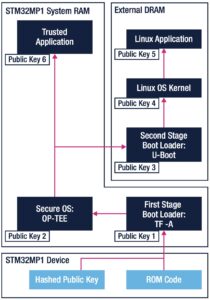

It can be hard for engineers and managers to know where to start. Hence, the white paper lays out the major building blocks needed to create a reliable chain of trust. For instance, it explains how the STM32MP1 and STM32MP2 environments come with a reference boot sequence that relies on a root of trust. ST uses TF-A to start securely, OP-TEE as a secure OS, and then U-Boot as a second-stage boot loader that initializes the Linux kernel in external RAM. We also have a wiki that helps developers implement all these elements. The white paper is thus a launching pad for project managers.

ST also offers secure firmware installation mechanisms, such as Secure Secret Provisioning. Developers can encrypt their keys on a hardware security module (HSM). The OEM loads the encrypted data and secret information, and the MPU decrypts them internally using the HSM. As a result, no one can run an unprotected bootloader or access sensitive data, and customers can track the number of devices flashed by the OEM. It is thus critical to guarantee a chain of trust that goes beyond a bootloader or software layer and represents a comprehensive approach to product design and manufacturing.

2. Detect, anticipate, and respond to incidents

Why audit logs, gateway, and code?

The world of embedded systems can no longer ignore the best practices established by IT security in other domains. A few years ago, companies rarely audited their embedded systems because they didn’t see the value in it. In many instances, data remained local, and there was no sensitive information. However, the next automation age makes embedded systems more connected and smarter. Hence, it is critical for companies to audit their logs, periodically check software implementations, and monitor traffic for intrusions. Like cars today, developers can only protect users from a crash if they know there’s been one or there’s a dangerous situation in progress. Hence, engineers must stay abreast of the latest security trends [3].
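As a simple illustration of what auditing logs can mean in practice, the Python sketch below flags sources that accumulate repeated failed logins. The log format (`timestamp source event`), the `LOGIN_FAILED` event name, and the threshold are all assumptions made for this example; a real deployment would adapt them to whatever its logging stack produces.

```python
from collections import Counter
from typing import Iterable, List


def suspicious_sources(log_lines: Iterable[str], threshold: int = 3) -> List[str]:
    """Return sources with at least `threshold` failed logins, sorted by name."""
    failures: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        # Hypothetical format: "<timestamp> <source> <event>"
        if len(parts) >= 3 and parts[2] == "LOGIN_FAILED":
            failures[parts[1]] += 1
    return sorted(src for src, count in failures.items() if count >= threshold)


if __name__ == "__main__":
    sample = [
        "2024-01-01T10:00:00 10.0.0.7 LOGIN_FAILED",
        "2024-01-01T10:00:02 10.0.0.7 LOGIN_FAILED",
        "2024-01-01T10:00:03 10.0.0.9 LOGIN_OK",
        "2024-01-01T10:00:05 10.0.0.7 LOGIN_FAILED",
    ]
    print(suspicious_sources(sample))
```

Even a check this basic turns a log file from a passive record into a signal that something dangerous may be in progress.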

Another new trend is the rise of security certification. Regulators and other organizations are creating certifications that guarantee the robustness of security implementations. For example, when we say that the STM32MP1 and STM32MP2 can help pass a SESIP Level 3 certification, it means that their true random number generator has been tested against the NIST SP 800-90B recommendation, among many other things. Consequently, managers who need to obtain a FIPS 140-3 certification can reuse our SP 800-90B certificate and release their product to market faster. Similarly, the pre-certifications we obtained for the STM32MP135 and STM32MP25 mean that obtaining the rigorous POS PCI-PTS certification required for banking applications is significantly easier.

What is a contingency plan and FUOTA?

Moreover, companies must create contingency plans to respond rapidly after detecting a vulnerability or an intrusion. Having an emergency response in place can save precious time and vastly help decision-making. It also avoids making major decisions during a fraught and stressful time. Teams can draw inspiration from various resources online. For instance, the Cybersecurity Incident & Vulnerability Response Playbooks from the U.S. Cybersecurity and Infrastructure Security Agency (CISA) is an excellent place to start. The document advocates creating two playbooks: one dealing with incidents and the other for vulnerabilities. Not every aspect of the document will apply to embedded systems or private companies, but it’s a good starting point.

It is also critical to implement firmware update over-the-air (FUOTA) mechanisms. Patching a system remotely is essential in a crisis. However, FUOTA is particularly complex to implement. In a recent article published in the journal Internet of Things [4], researchers in France looked at the challenges behind FUOTA, from resource constraints to network topologies, device management, security, and more. There are a lot of components that must come together on the embedded system and the remote server. Still, in many instances, FUOTA is more accessible than companies think, and for most, outsourcing is the cost-effective option.
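To make one of those components concrete, here is a minimal Python sketch of a device-side check before an update is accepted. The `Manifest` fields and the decision strings are hypothetical, and the sketch assumes the manifest itself has already been authenticated (for example, by a signature check), which a real FUOTA system must also perform.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Manifest:
    """Hypothetical update manifest, assumed to be authenticated beforehand."""
    version: int
    sha256: str  # hex digest of the firmware payload


def check_update(current_version: int, manifest: Manifest, payload: bytes) -> str:
    """Decide whether a downloaded firmware payload may be installed."""
    # Anti-rollback: never accept an older (possibly vulnerable) version.
    if manifest.version <= current_version:
        return "rejected: version is not newer"
    # Integrity: the payload must match the digest in the manifest.
    digest = hashlib.sha256(payload).hexdigest()
    if not hmac.compare_digest(digest, manifest.sha256):
        return "rejected: payload does not match manifest"
    return "accepted"


if __name__ == "__main__":
    firmware = b"new firmware image"
    manifest = Manifest(version=5, sha256=hashlib.sha256(firmware).hexdigest())
    print(check_update(4, manifest, firmware))
    print(check_update(5, manifest, firmware))
    print(check_update(4, manifest, firmware + b"tampered"))
```

An accepted payload would typically be written to an inactive storage slot so the device can fall back to the previous firmware if the new one fails to boot; that part is left out of the sketch.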

3. Invest in, contribute to, and depend on the open-source community and proven partners

Adopting an open-source approach

There’s a clear shift toward the open-source community. With the launch of the STM32MP1, ST promised to keep upstreaming its drivers and work on OpenSTLinux. Beyond the advantages we already talked about, working with open-source software simplifies workflows. Using open and popular tools means it is easier to hire people and transfer projects to a new team if need be. In many instances, there’s more support and knowledge around open software than proprietary solutions. Being part of a community also tends to bolster morale. Engineers will be more likely to tackle a project knowing it serves a larger purpose. For instance, upstreaming patches is highly rewarding, knowing many in the industry will use them.

Some companies are reluctant to adopt open-source technologies due to licensing issues. There are some misconceptions around proprietary applications that run on Linux and use tools under the General Public License (GPL), with some fearing legal exposure. In practice, the opposite is true. Software like TF-A, OP-TEE, and U-Boot allows companies to build their commercial solutions on top of them without fear of legal repercussions. A 2004 paper from Wharton explained that it was possible to make money on open-source platforms and that future licenses would help clarify issues. The ST white paper thus helps managers understand fundamental concepts around licensing so they can navigate this issue better and start their project with the right mindset.

The need for greater collaboration

In an executive order signed in May 2021 and entitled “Improving the Nation’s Cybersecurity”, even the White House recommends greater collaboration between service providers when it comes to IT security. The industry is entering a new era where requirements on embedded systems are far more stringent. Data is increasingly precious, even consumer systems are mission-critical, and protecting information is no longer optional. By adopting the lessons outlined in the white paper and collaborating with the open-source community and members of the ST Partner Program, a company can transform its culture. Security doesn’t have to be an afterthought. It can lead a company to create robust embedded systems that thrive, even when facing security challenges.

1. Murti, K. (2022). Design Principles for Embedded Systems, p. 424. Transactions on Computer Systems and Networks. Springer, Singapore. https://doi.org/10.1007/978-981-16-3293-8

2. Murti, K. (2022). Idem, p. 421.

3. Rachit, Bhatt, S. & Ragiri, P.R. (2021). Security trends in Internet of Things: a survey. SN Appl. Sci. 3, 121. https://doi.org/10.1007/s42452-021-04156-9

4. El Jaouhari, S. & Bouvet, E. (2022). Secure firmware Over-The-Air updates for IoT: Survey, challenges, and discussions. Internet of Things, Volume 18. https://doi.org/10.1016/j.iot.2022.100508