Check out our live coverage of XR Day to learn more about the presentations and see the demos in real time.

On March 3, the University of Washington will hold its XR Day, and ST is sponsoring the event. As its name indicates, the conference will focus on virtual (VR), augmented (AR), and mixed (MR) realities, their hardware and software ecosystems, the accomplishments and challenges of the industry, as well as the deep ethical and philosophical questions that crop up as these technologies become ever more popular. It’s a fascinating time to hold such an event because the industry seems to be at a crossroads. IDC recently forecast that worldwide spending on AR and VR would reach $18.8 billion in 2020, which, if it comes to fruition, would be an increase of 78.5% compared to 2019. And yet, popular media is wondering where these headsets are going and if any will finally reach critical mass.

XR Day: Bringing Clarity to Confusing and Challenging Definitions

The industry still seems to be struggling with the very meaning of these terms, which appears to reflect the uncertainties that plague these technologies. While people tend to agree that VR is about entirely replacing the real world and its sensory experience with computer-generated alternatives, the degree of immersion required for a truly virtual experience is up for debate. Is visual and auditory information enough, or does an authentic VR experience also require tactile sensations?

Similarly, the other terms don’t always mean the same thing to all people. “Augmented reality” most often refers to the superposition of virtual elements onto an optical device that lets the real world through, such as smart glasses, yet some companies don’t hesitate to use the term “AR” to refer to products that only produce sound. And mixed reality seems to sit between AR and VR to encompass devices that capture the real world through cameras, project it using a video, then add virtual elements on top of it. However, can we call it the “real world” if it’s only a reproduction coming from a CMOS sensor?

No other term showcases these difficulties better than “XR” itself. Some [1] use “XR” as a substitute for “mixed reality,” while others [2] believe it to be a subset of MR because they use it to refer to MR devices that offer a virtual environment shared within an online community. On the other hand, some like Charles Wyckoff, who first coined the term “XR” in a patent filing in 1961, seem to embrace it as a catchall phrase for anything between the real world and VR [3]. Even the term itself appears to be up for debate, with some simply calling it “XR,” while others use it as an initialism for cross or extended reality.

History of VR, AR, MR, and the New Hopes from AR Clouds and Applications

The lack of clear definitions may stem from the tumultuous history of these technologies. The idea of a virtual reality is as old as Ancient Greece itself and the myth of Zeuxis, a painter of such extraordinary talent that, according to legend, he drew grapes so realistically that birds tried to eat them. And it’s the French playwright Antonin Artaud who seems to have first used the term “virtual reality” in 1938, in a context unrelated to technology. The first modern AR/VR products were military in nature and date back to the 1950s, but as Dr. Bernard Kress explains in his new book Optical Architectures for Augmented-, Virtual-, and Mixed-Reality Headsets, the first true AR/VR expansion took place in the early 1990s. However, it failed to grab the masses and died down, only to resurface today in what he calls the “second AR/VR boom.”

The reasons for the absence of widespread adoption, even during this renaissance period, remain difficult to pinpoint. From the lack of maturity of sensors, display solutions, and applications, to the low ROI on AR, VR, and MR products, only one thing is certain: there are still more failures than success stories. However, both Dr. Kress and the industry at large seem to remain optimistic. AR clouds, which are real-time 3D content that users can share and propagate, seem to point to the “killer application” that appears to be missing. Additionally, the appearance of new use cases that would see XR as a replacement for traditional tablets or computers could potentially make AR, VR, and MR a lot more meaningful to consumers. One thing is for sure: the industry is crying out to know where we’re going and how we’ll get there, and XR Day can potentially offer some answers.

XR Day: Presentations From Leaders, Pioneers, and Experts

The panel of industry experts and scholars that will offer presentations at the event will surely help attendees grasp how to look at AR, VR, and MR today and how to understand the present and future impact of these technologies. Dr. Bernard Kress, Partner Optical Architect at Microsoft / HoloLens, will join XR Day on the heels of a successful SPIE AR VR MR conference that took place less than a month ago, and the release of his new book that tackles, among other things, the optical challenges inherent to these technologies. He is best known for his work on optical systems for consumer electronics such as Google Glass in 2010 and the Microsoft HoloLens projects since 2015.

XR Day will also welcome Chelsea Klukas, Product Design Manager at Oculus; Vinay Narayan, Vice President, Platform Strategy & Developer Community at HTC Vive; and Stefan Alexander, VP Advanced R&D at North, whom we interviewed in preparation for this event. Alexander talked to us about Focals, his company’s smart glasses, and the lessons they can teach the industry. We will also meet Dr. Evie Powell, Virtual Reality Engineer at Proprio Vision as well as President/CEO of Verge of Brilliance, LLC, and Dr. Walterio Mayol-Cuevas, Professor at the University of Bristol. We thus have an eclectic panel of industry leaders, pioneers, visionaries, and intellectuals to address the tough questions, share their experience, and offer concrete solutions or ideas to meet the challenges that may be holding these technologies back.

XR Day: Demos with MEMS Micromirrors and Laser Beam Scanning

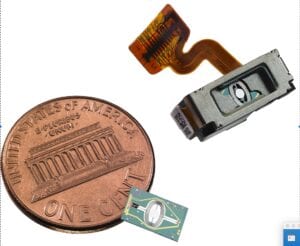

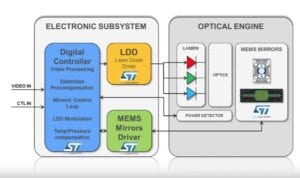

Attendees will have access to demos that will showcase technologies from some of the partners exhibiting at the conference. We will also hold joint presentations with some of our collaborators to showcase our MEMS Micromirrors chipset for Laser Beam Scanning (LBS) solutions. These components are at the center of augmented reality as they enable the projection of an image onto optics that still let the real world through. Our job is to work with companies and assist them as they figure out the right technical specifications. We also provide the electronic subsystem that controls the laser diode and drives the MEMS mirrors present in the optical engine, so they can focus on making applications and features that will distinguish them from the rest of the industry.

XR Day: Demos with Microcontrollers, Microprocessors, and Sensors

Attendees will also get to see how ST contributes to this industry. For instance, we will run facial recognition software on an STM32H7 development platform to show how artificial intelligence, thanks to STM32Cube.AI, can thrive on embedded systems. Similarly, we will showcase an image recognition program that uses our STM32MP1 microprocessor to explain that creating complex products with our MPU doesn’t have to be hard if you follow our ten commandments. Both of these demonstrations establish the computational capabilities of our platforms to help students and leaders see what they can accomplish without necessarily tethering headwear to a computer. Our components also come with cost-effective development boards and free tools to make these technologies more accessible.

ST will also present two demos that make use of the SensorTile.box, our newest sensor platform, which targets enthusiasts, professionals, and experts. The kit contains an inertial sensor with machine learning capabilities, as well as a high-resolution three-axis accelerometer, a barometer, a hygrometer, a thermometer, a magnetometer, and a Bluetooth LE module to send all that information to a host system. Sensors are at the heart of successful AR, VR, and MR products, and understanding how to use them best is crucial. One demo will show how the SensorTile.box can excel in a contextual awareness scenario using artificial intelligence, while the other will demonstrate gesture recognition. Engineers, students, and decision-makers will thus have a better grasp of how they can employ these same technologies to offer more immersive and faithful experiences.

XR Day: Breakout Sessions to Challenge Technical and Existential Assumptions

Finally, XR Day will explore numerous technical and existential issues surrounding AR, VR, and MR in breakout sessions. For instance, Elin Björling, from the University of Washington, will look at the potential threats, ethical challenges, and misuses that may arise as these platforms get more popular. Lisa Castaneda, from foundry 10, will walk attendees through the use of VR in schools and the inherent problems that it may entail, while Amy Lou Abernethy, from AMP Creative, will explain how these tools can help build empathy and understanding. XR is unique because, unlike other technologies that tend to raise similar issues only after they reach critical mass, the public started struggling with these questions early in the second AR/VR boom, and it seems that the industry must find answers before it can genuinely reach mass adoption.

1. Joseph A. Paradiso and James A. Landay. 2009. Guest editors’ introduction: Cross-reality environments. IEEE Pervasive Computing 8, 3 (2009); Beth Coleman. 2009. Using sensor inputs to affect virtual and real environments. IEEE Pervasive Computing 8, 3 (2009).

2. J. A. Paradiso and J. A. Landay, “Guest Editors’ Introduction: Cross-Reality Environments,” in IEEE Pervasive Computing, vol. 8, no. 3, pp. 14-15, July-Sept. 2009. doi: 10.1109/MPRV.2009.47

3. For a more thorough exposition of the various definitions, see: Mann, Steve & Furness, Tom & Yuan, Yu & Iorio, Jay & Wang, Zixin. (2018). “All Reality: Virtual, Augmented, Mixed (X), Mediated (X,Y), and Multimediated Reality”. arXiv:1804.08386