For many industrial applications, computer vision is a key source of data, and its techniques have evolved greatly in the last few years. In particular, deep neural networks have revolutionized the recognition of objects and of their position in space. Integrating these techniques into embedded systems has been a challenge: a variety of tools can be used to implement neural network processing, and they must be integrated efficiently with the rest of the media pipeline while ensuring that traditional performance techniques, such as zero-copy buffer handling, are applied correctly.

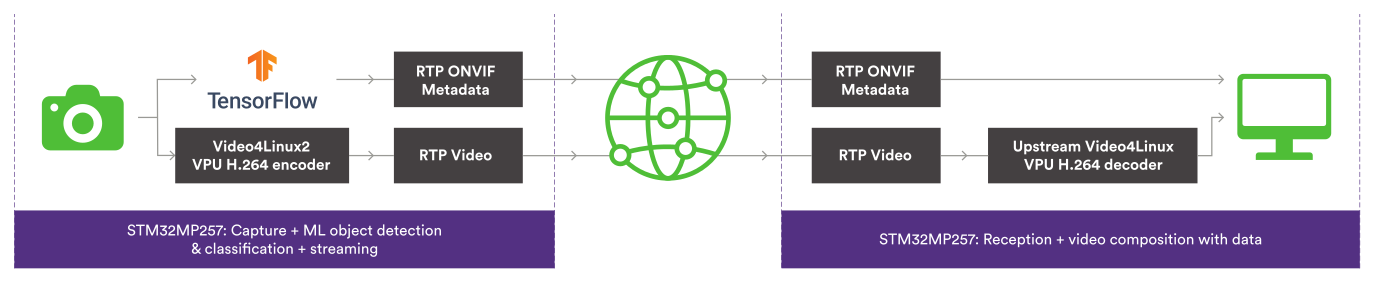

Collabora, a member of the ST Partner Program, recently partnered with STMicroelectronics to present a typical vision pipeline running on the STM32MP2. The pipeline captures images from the camera, runs them through the SSD MobileNet neural network to recognize the objects present in the video, checks whether they are animals, and finally displays those findings. As an added twist, the pipeline was split across two systems: the first sends the H.264-compressed video, along with the list of animals and their positions, to a second system, which draws the bounding boxes and displays the combined video.

Integrating neural network inference into GStreamer

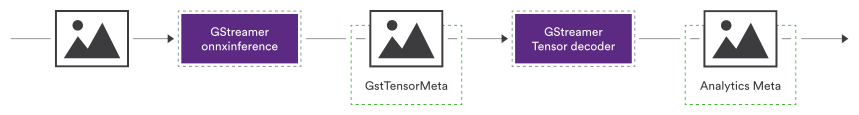

As part of the inference process, the images coming from a camera generally go through three stages: pre-processing, inference, and post-processing. Pre-processing transforms the image into the exact format that the neural network expects, with steps such as scaling the image, changing the color format, or normalizing the values. This step corresponds to traditional video manipulation functionality that is already present in multimedia frameworks such as GStreamer. The second step is the inference itself, which is generally handled by a neural network engine; typical engines on embedded systems are ONNX Runtime and TensorFlow Lite. This step can be greatly accelerated by the neural processing unit (NPU) present in the platform. The last step is post-processing: a neural network outputs tensors of floating-point numbers, which must then be interpreted for the system to produce meaningful results.
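
As a rough illustration of what pre-processing involves, the following pure-Python sketch scales an RGB frame with nearest-neighbour sampling and normalizes it into a flat HWC float tensor. The 300x300 input size and the (x - 127.5) / 127.5 normalization are assumptions typical of float SSD MobileNet models; a quantized model would usually take the uint8 pixels directly, and in the real pipeline the scaling and color conversion are done by a GStreamer element rather than Python code.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each in 0..255
Image = List[List[Pixel]]     # list of rows, top row first


def scale_nearest(img: Image, out_w: int, out_h: int) -> Image:
    """Nearest-neighbour resize: each output pixel copies one source pixel,
    chosen by integer scaling of its coordinates."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def normalize(img: Image, mean: float = 127.5, std: float = 127.5) -> List[float]:
    """Flatten to an HWC float tensor with each channel mapped to [-1.0, 1.0]."""
    return [(c - mean) / std for row in img for px in row for c in px]


def preprocess(img: Image, width: int = 300, height: int = 300) -> List[float]:
    """Scale to the model's (assumed) input size, then normalize."""
    return normalize(scale_nearest(img, width, height))


frame = [[(255, 0, 0)] * 4 for _ in range(2)]  # tiny 4x2 all-red frame
tensor = preprocess(frame, width=2, height=2)
print(len(tensor), tensor[:3])  # 12 [1.0, -1.0, -1.0]
```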

GStreamer is the ideal framework for this kind of multi-stage processing. It can also handle the other parts of the pipeline, such as video compression, streaming, and display, making it possible to implement the entire video processing system as a single GStreamer graph. The pre-processing stage can normally be handled by existing GStreamer elements such as `videoconvertscale`, which scales the video and converts it into the desired color format in a single step, using the NEON SIMD acceleration available on the Arm Cortex-A35 cores.

On our STM32MP2 platform, the inference step uses the TensorFlow Lite inference engine to take advantage of the new neural processing unit accelerator. Collabora created a `tfliteinference` GStreamer element to integrate the engine into the GStreamer pipeline. This simple element loads the TensorFlow Lite network, applies it to each frame, and attaches the resulting tensors to the original frame for further post-processing. The ONNX Runtime framework could just as easily have been used instead, by replacing the `tfliteinference` element with `onnxinference`, without modifying the rest of the pipeline.
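
The modularity described above can be illustrated with a small Python helper that assembles a `gst-launch-1.0`-style pipeline description in which only the inference element changes between TensorFlow Lite and ONNX Runtime. This is a sketch, not the demo's actual pipeline: the `v4l2src` camera source, the 300x300 RGB caps, the single-branch layout, the `objectdetectionoverlay` drawing stage, and the `model-file`/`label-file` property names are assumptions to check with `gst-inspect-1.0` against your GStreamer version. The model and label file names are hypothetical.

```python
def build_pipeline(engine: str, model_file: str, label_file: str) -> str:
    """Assemble a gst-launch-1.0 style description; only the inference stage varies.

    Element properties (model-file, label-file) and the caps are assumptions
    to verify with gst-inspect-1.0.
    """
    inference = {"tflite": "tfliteinference", "onnx": "onnxinference"}[engine]
    stages = [
        "v4l2src",                                      # camera capture (assumed source)
        "videoconvertscale",                            # pre-processing: scale + color conversion
        "video/x-raw,format=RGB,width=300,height=300",  # model input format (assumed)
        f"{inference} model-file={model_file}",         # inference: attaches output tensors
        f"ssdobjectdetector label-file={label_file}",   # tensor decoder: tensors -> analytics meta
        "objectdetectionoverlay",                       # draws boxes from the analytics meta
        "videoconvertscale",
        "autovideosink",
    ]
    return " ! ".join(stages)


tflite = build_pipeline("tflite", "ssd_mobilenet.tflite", "labels.txt")
onnx = build_pipeline("onnx", "ssd_mobilenet.onnx", "labels.txt")
print(tflite)
```

Comparing the two strings shows that the capture, pre-processing, tensor decoding, and display stages are identical; only the inference stage differs.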

Finally, post-processing requires running code that understands the tensors output by the model and gives them meaning. This step is specific to the model, but independent of the inference engine used. In this example, the `ssdobjectdetector` GStreamer element was used; it is specific to the SSD MobileNet object detection model, and this kind of element is referred to as a tensor decoder. It reads the four tensors generated by the model, extracts the position, size, and class of each object, and then attaches this information to the video frame as explicit metadata. A different model would require a different tensor decoder, but the rest of the pipeline could be kept unmodified.
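
A tensor decoder for SSD-style models is mostly bookkeeping. The sketch below assumes the four output tensors follow the layout of the common TensorFlow Lite SSD MobileNet export (normalized `[ymin, xmin, ymax, xmax]` boxes, class indices, scores, and a detection count). The `Detection` type, the 0.5 score threshold, and the direct class-index-to-label mapping are illustrative assumptions, not the `ssdobjectdetector` implementation.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Detection:
    label: str
    score: float
    x: int  # left edge, pixels
    y: int  # top edge, pixels
    w: int  # width, pixels
    h: int  # height, pixels


def _clamp01(v: float) -> float:
    return min(max(v, 0.0), 1.0)


def decode_ssd(boxes: Sequence[Sequence[float]], classes: Sequence[float],
               scores: Sequence[float], count: Sequence[float],
               labels: Sequence[str], width: int, height: int,
               threshold: float = 0.5) -> List[Detection]:
    """Turn the four SSD output tensors into labelled pixel-space boxes."""
    detections = []
    for i in range(int(count[0])):
        if scores[i] < threshold:
            continue  # drop low-confidence candidates
        # Assumed box layout: normalized [ymin, xmin, ymax, xmax], clamped to [0, 1].
        ymin, xmin, ymax, xmax = (_clamp01(v) for v in boxes[i])
        left, top = round(xmin * width), round(ymin * height)
        detections.append(Detection(
            label=labels[int(classes[i])],  # assumes the index maps directly to a label
            score=scores[i],
            x=left, y=top,
            w=round(xmax * width) - left,
            h=round(ymax * height) - top,
        ))
    return detections
```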

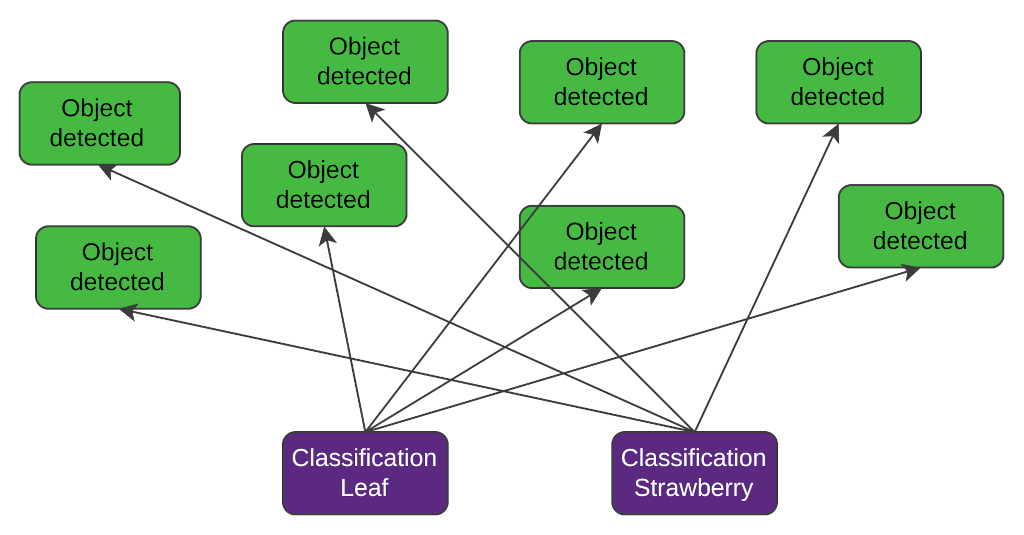

Letting analytics metadata flow down the GStreamer pipeline

Once an analysis has been performed on an image, it can produce a large amount of metadata: it is not uncommon for hundreds of objects to be found in a single video frame. An efficient way to convey this information to downstream elements was needed, and the new GstAnalyticsRelationMeta is the perfect fit. Introduced in GStreamer 1.24, this single meta contains an array of metadata structures and, more importantly, also specifies the relationships between them. In this case, one type of structure describes the class of the object (“Is it an animal? A strawberry? A leaf?”), and a second one contains the position and size of the object found. The two are related through an adjacency matrix, making it possible to efficiently answer questions such as “list all animals” or “which class is this object?”.
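
To make the adjacency-matrix idea concrete, here is a deliberately simplified Python model of a relation meta. It is not the GstAnalytics C API, where entries are typed structures such as `GstAnalyticsODMtd` and `GstAnalyticsClsMtd` and relations carry a type; the `ToyRelationMeta` class, its `kind`/`label` fields, and its helper methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ToyRelationMeta:
    """Simplified model: a list of metadata entries plus a square adjacency matrix."""
    entries: List[Dict] = field(default_factory=list)
    adjacency: List[List[bool]] = field(default_factory=list)

    def add(self, entry: Dict) -> int:
        """Append an entry and grow the matrix by one row and one column."""
        for row in self.adjacency:
            row.append(False)
        self.entries.append(entry)
        self.adjacency.append([False] * len(self.entries))
        return len(self.entries) - 1

    def relate(self, src: int, dst: int) -> None:
        self.adjacency[src][dst] = True

    def related(self, src: int, kind: str) -> List[int]:
        """Indices of entries of the given kind that `src` points to."""
        return [dst for dst, linked in enumerate(self.adjacency[src])
                if linked and self.entries[dst]["kind"] == kind]

    def classes_of(self, obj: int) -> List[str]:
        """Answers "which class is this object?"."""
        return [self.entries[c]["label"] for c in self.related(obj, "classification")]

    def objects_with_label(self, labels: Set[str]) -> List[int]:
        """Answers "list all animals" when `labels` is the set of animal classes."""
        return [i for i, e in enumerate(self.entries)
                if e["kind"] == "object" and set(self.classes_of(i)) & labels]


meta = ToyRelationMeta()
cat = meta.add({"kind": "classification", "label": "cat"})
box = meta.add({"kind": "object", "x": 10, "y": 20, "w": 64, "h": 48})
meta.relate(box, cat)  # "this box is classified as a cat"
print(meta.classes_of(box), meta.objects_with_label({"cat", "dog"}))
```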

Once this metadata is standardized, elements can analyze or transform it independently of how it was produced. The current example uses the ONVIF metadata format, a standard that comes from the security camera community. Collabora implemented a pair of GStreamer elements to convert from the GStreamer analytics meta into the ONVIF XML metadata format and vice versa. These elements are written in the Rust programming language: ONVIF XML is meant to be streamed over the network, and Rust's safety advantages are ideal when dealing with untrusted data. Finally, one last element takes this metadata and draws boxes on the screen, synchronized with the original frame.
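
The following sketch shows the general shape of an ONVIF-style metadata frame and the conversion in both directions, using Python's `xml.etree.ElementTree`. It is a simplified assumption of the schema (one `Frame` holding `Object` entries, each with a `BoundingBox` and a `Type` carrying a `Likelihood`); other elements the schema defines, such as `CenterOfGravity`, are omitted and the coordinate convention is left open. It is not the output of Collabora's Rust elements. Note that the standard-library parser is not hardened against malicious XML; for untrusted input in Python, a package such as `defusedxml` would be the safer choice.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

TT = "http://www.onvif.org/ver10/schema"
ET.register_namespace("tt", TT)

# (label, likelihood, left, top, right, bottom)
Obj = Tuple[str, float, float, float, float, float]


def q(tag: str) -> str:
    """Qualify a tag name with the ONVIF schema namespace."""
    return f"{{{TT}}}{tag}"


def to_onvif_xml(utc_time: str, objects: List[Obj]) -> bytes:
    stream = ET.Element(q("MetadataStream"))
    analytics = ET.SubElement(stream, q("VideoAnalytics"))
    frame = ET.SubElement(analytics, q("Frame"), UtcTime=utc_time)
    for obj_id, (label, likelihood, left, top, right, bottom) in enumerate(objects):
        obj = ET.SubElement(frame, q("Object"), ObjectId=str(obj_id))
        appearance = ET.SubElement(obj, q("Appearance"))
        shape = ET.SubElement(appearance, q("Shape"))
        ET.SubElement(shape, q("BoundingBox"), left=str(left), top=str(top),
                      right=str(right), bottom=str(bottom))
        cls = ET.SubElement(appearance, q("Class"))
        ET.SubElement(cls, q("Type"), Likelihood=str(likelihood)).text = label
    return ET.tostring(stream)


def from_onvif_xml(data: bytes) -> List[Obj]:
    root = ET.fromstring(data)
    result = []
    for obj in root.iter(q("Object")):
        bbox = obj.find(f"{q('Appearance')}/{q('Shape')}/{q('BoundingBox')}")
        type_ = obj.find(f"{q('Appearance')}/{q('Class')}/{q('Type')}")
        if bbox is None or type_ is None:
            continue  # skip incomplete objects instead of failing on untrusted input
        left, top, right, bottom = (float(bbox.get(k, "0"))
                                    for k in ("left", "top", "right", "bottom"))
        result.append((type_.text or "", float(type_.get("Likelihood", "0")),
                       left, top, right, bottom))
    return result
```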

Video transmission

The other half of this example is a standard RTSP video transmission. The video frames are encoded into H.264 by the hardware video encoder present on our STM32MP2 and then streamed, along with the analytics XML, using the GStreamer RTSP Server. The receiver uses the `rtspsrc` GStreamer element to connect to the server and receive the H.264-encoded video together with the XML. It then uses the STM32MP2 hardware video decoder to efficiently decode the video so that it can be recombined with the metadata.
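
On the receiving side, recombining metadata with decoded frames comes down to timestamp matching. GStreamer's RTP stack normally converts RTP timestamps into buffer timestamps for you, so the sketch below only illustrates the principle. It assumes a 90 kHz RTP clock (the standard rate for H.264 over RTP) and pairs each frame with the nearest metadata entry within a tolerance; the 5 ms tolerance and the function names are hypothetical.

```python
import bisect
from typing import Dict, List, Optional, Tuple


def rtp_delta_ns(rtp_ts: int, base_rtp: int, clock_rate: int = 90_000) -> int:
    """Time elapsed since `base_rtp`, handling 32-bit RTP timestamp wrap-around."""
    ticks = (rtp_ts - base_rtp) & 0xFFFFFFFF
    return ticks * 1_000_000_000 // clock_rate


def match_metadata(frame_pts: List[int], meta: List[Tuple[int, str]],
                   tolerance_ns: int = 5_000_000) -> Dict[int, Optional[str]]:
    """Pair each frame timestamp with the closest metadata entry within
    `tolerance_ns`, or None. `meta` must be sorted by timestamp."""
    times = [t for t, _ in meta]
    result: Dict[int, Optional[str]] = {}
    for pts in frame_pts:
        i = bisect.bisect_left(times, pts)
        best = None
        for j in (i - 1, i):  # nearest neighbours on either side
            if 0 <= j < len(times) and abs(times[j] - pts) <= tolerance_ns:
                if best is None or abs(times[j] - pts) < abs(times[best] - pts):
                    best = j
        result[pts] = meta[best][1] if best is not None else None
    return result
```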

Collabora joined STMicroelectronics at SIDO in Lyon in September 2024 to showcase this work. Below is a video interview with Olivier Crête, Multimedia Lead at Collabora, demonstrating how open source software such as GStreamer can help build innovative products with the new STM32MP2 platform.

Same AI goodness, this time with TSN/AVB

At Embedded World in Nuremberg, Collabora demonstrated an upgraded version of this work, replacing RTSP and ONVIF with AVB for streaming. Using the power of Time-Sensitive Networking (TSN), the updated demo applies the AI model to the images captured from the camera using the GStreamer Analytics framework and sends both the video and the detection results over the network with very precise timing information. This enables the receiver to know the precise moment when an object was detected and react appropriately.
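
The precise timing comes from gPTP (IEEE 802.1AS) combined with the AVTP presentation time of IEEE 1722. The demo's custom metadata format is not described here, so the sketch below only shows the general mechanism under the standard conventions: the talker stamps each packet with the lower 32 bits of its gPTP time in nanoseconds plus a maximum transit time (2 ms is the default for SR class A), and the listener, whose clock is synchronized to the same gPTP time, expands that 32-bit value back into a full timestamp. The function names are hypothetical.

```python
MASK32 = 0xFFFF_FFFF


def avtp_timestamp(gptp_now_ns: int, max_transit_ns: int = 2_000_000) -> int:
    """Presentation time carried in the packet: low 32 bits of gPTP time + max transit."""
    return (gptp_now_ns + max_transit_ns) & MASK32


def expand_avtp_timestamp(ts32: int, receiver_gptp_ns: int) -> int:
    """Recover the full presentation time closest to the receiver's gPTP clock."""
    candidate = (receiver_gptp_ns & ~MASK32) | ts32
    half = 1 << 31
    if candidate - receiver_gptp_ns > half:    # low 32 bits wrapped on the receiver side
        candidate -= 1 << 32
    elif receiver_gptp_ns - candidate > half:  # low 32 bits wrapped on the sender side
        candidate += 1 << 32
    return candidate
```

Because both ends share the gPTP time base, the expanded value identifies the same instant on the receiver as on the sender, which is what lets the listener tell exactly when a detected frame was captured or should be presented.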

Another AVB feature used in the demo is network stream reservation, which makes it possible to reserve specific bandwidth for the video stream and for the metadata stream separately, ensuring that they arrive on time even when competing with lower-priority network traffic. This makes it easier to fully utilize the network while maintaining high-quality, low-latency streaming. Since the ONVIF metadata specification is tied to RTSP, Collabora created a custom AVB format to send the analysis results to the peer over AVB.
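
Stream reservation works by having each talker advertise a traffic specification, essentially a maximum frame size and a number of frames per class measurement interval, from which the network computes the bandwidth to reserve. The sketch below uses commonly cited IEEE 802.1Q conventions as assumptions: 8000 intervals per second for class A (125 µs) and 4000 for class B (250 µs), 42 bytes of per-frame Ethernet overhead, and at most 75% of the link reservable. The stream parameters in the example are hypothetical, not the demo's.

```python
from dataclasses import dataclass
from typing import List

CLASS_INTERVALS_PER_SEC = {"A": 8000, "B": 4000}  # 125 µs and 250 µs intervals
ETH_OVERHEAD_BYTES = 42  # preamble+SFD, MAC addresses, VLAN tag, EtherType, FCS, gap


@dataclass
class StreamTSpec:
    name: str
    sr_class: str             # "A" or "B"
    max_frame_size: int       # payload bytes per frame
    max_interval_frames: int  # frames per class measurement interval


def reserved_bps(s: StreamTSpec) -> int:
    """Bandwidth to reserve for one stream, in bits per second."""
    per_frame_bits = (s.max_frame_size + ETH_OVERHEAD_BYTES) * 8
    return per_frame_bits * s.max_interval_frames * CLASS_INTERVALS_PER_SEC[s.sr_class]


def fits(streams: List[StreamTSpec], link_bps: int, max_fraction: float = 0.75) -> bool:
    """Check whether all reservations fit in the reservable share of the link."""
    return sum(reserved_bps(s) for s in streams) <= link_bps * max_fraction


video = StreamTSpec("video", "A", 1400, 1)
metadata = StreamTSpec("metadata", "B", 200, 1)
print(reserved_bps(video), reserved_bps(metadata))  # 92288000 7744000
print(fits([video, metadata], 1_000_000_000), fits([video, metadata], 100_000_000))  # True False
```

Reserving the two streams separately means a burst of metadata cannot eat into the video's guaranteed share, and vice versa.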