We are live at XR Day, the conference that focuses on Virtual, Augmented, Mixed, and Extended realities. We invite you to read our event blog post to learn more about the current challenges and innovations that led us to sponsor this event at the University of Washington.

9:00 AM

As people arrived at XR Day and demos started to boot, attendees gravitated toward some of the ST presentations. On one table, people could see the STM32H747I-DISCO, an STM32H7 development board, using a camera module not only to recognize faces but also to determine a person’s feelings through machine learning. As the pictures above show, the system was able to determine if a person was happy, disgusted, sad, etc. Thinking of AR, VR, and MR, a system capable of capturing the user’s response to specific visual cues or interfaces could be invaluable. Beyond the demo itself, it also shows that it’s possible to run pertinent neural networks on microcontrollers.

Another demo uses a consumer-grade webcam connected to the USB port of an STM32MP157C-DK2 Discovery Kit to capture a series of pictures in fast succession while the MPU uses a neural network to identify what they show and report a degree of certainty for each guess. These demos show not only the power behind our microcontrollers and microprocessors but also the efficiency that is available thanks to X-CUBE packages such as STM32Cube.AI, which converts a neural network into optimized code for STM32. The demos were a great way to help attendees at XR Day understand that there’s untapped potential in embedded systems, which are often a lot smarter than we give them credit for.
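For readers curious about what "degrees of certainty" can look like in practice, here is a minimal sketch, not the code running in the demo. It assumes the network ends with a softmax layer that turns raw scores into probabilities, which is common for image classifiers. The labels, the scores, and the `top_k` helper are all hypothetical and only serve the illustration.

```python
import math


def softmax(logits):
    """Turn raw network scores into probabilities that sum to 1."""
    highest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(score - highest) for score in logits]
    total = sum(exps)
    return [value / total for value in exps]


def top_k(logits, labels, k=3):
    """Return the k most likely (label, probability) pairs, best first."""
    probabilities = softmax(logits)
    ranked = sorted(zip(labels, probabilities), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical labels and raw scores, made up for this example.
LABELS = ["mug", "keyboard", "banana", "phone"]
raw_scores = [2.1, 0.3, 4.0, 1.2]

for label, probability in top_k(raw_scores, LABELS):
    print(f"{label}: {probability:.1%}")
```

Reporting the top few guesses with their probabilities, rather than a single answer, is what lets a demo like this one show how confident the network is in each picture.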

9:30 AM

Julie Kientz, Professor and Chair of Human-Centered Design & Engineering at the University of Washington, launched the event and shared her personal and professional experiences with XR. Bharath Rajagopalan, Director of Strategic Market Development at ST, then took the stage to introduce who we are and, most importantly, our dedication to research and our devotion to XR. By looking at products already shaping the industry, he explained the place of sensors, computation, and optics, and how they need to come together to create a meaningful user experience. Ultimately, he conveyed that XR Day is about bringing people within the industry together to make AR, VR, and MR genuinely impactful.

9:45 AM

Vinant Narayan, Vice President, Platform Strategy & Developer Community at HTC Vive, took the stage to share the VR capabilities that are already changing the industry. It’s easy to think of future applications, but he clearly showed that some companies are benefiting from VR already. For instance, engineers designing a helicopter were using VR headsets to conceive new parts. Instead of manufacturing multiple versions, they could virtually draw their schematics, visualize them, and significantly reduce their time to market.

10:15 AM

Dr. Bernard Kress, Partner Optical Architect at Microsoft / HoloLens, explored what makes a good AR headset through the lens of “human-centric optical design.” His technical and in-depth study of the laws that govern optics and human vision helped attendees look at the considerations that engineers must deal with when coming up with their designs, as well as some of the systems that are currently shaping the industry. It was a very thought-provoking talk that challenged participants to keep improving the user experience to make it genuinely seamless and “human-centric.”

11:05 AM

Dr. Evie Powell, Virtual Reality Engineer at Proprio Vision as well as President/CEO of Verge of Brilliance, LLC, made the audience smile when she compared AR to the famous Magic School Bus cartoons. However, what she showed truly made us dream when she revealed her work with healthcare professionals and how AR is revolutionizing the relationship between doctors and patients by transforming specific procedures. It was very motivating to see that these technologies are already making a significant positive impact on people’s lives, which shows how much potential remains untapped.

11:35 AM

Dr. Walterio Mayol-Cuevas, Professor at the University of Bristol, talked about the state of innovations and, among other things, the content that we bring to XR devices. For instance, he explored the examples of skill-sharing and ranking content based on the qualification of the person on screen. It’s an important topic because as much as we talk about the hardware, what we bring to users is as crucial in the race toward critical mass. This is another example of how machine learning can inform XR to move the industry to a more meaningful experience.

12:10 PM

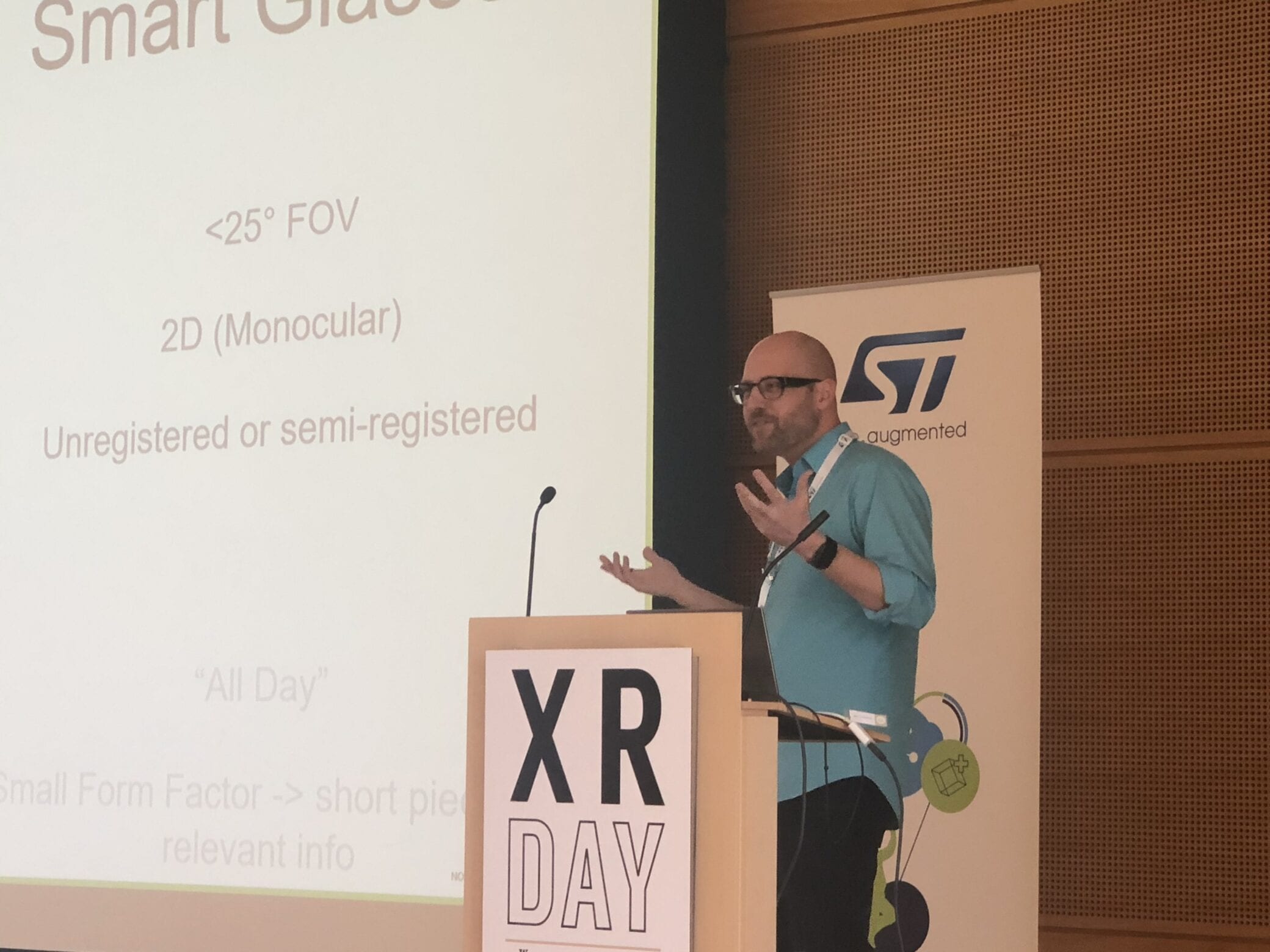

Stefan Alexander, VP Advanced R&D at North, whom we interviewed in preparation for this event, gave a great talk that brought all the morning sessions together by looking at both the hardware and software. He explained where we are now technologically and what we can expect in the next five years, as well as the various use cases that drive the industry today and what we are hoping to accomplish. He outlined the challenges as well as the many benefits leaders are hoping to bring to users.

1:00 PM

Mega 1, a Taiwanese company, is presenting an exciting demo that showcases a laser beam scanning (LBS) and lightguide solution that uses ST’s micromirrors and LBS solutions. The product on display serves to show, in practical terms, how a company can take the Mega 1 module and integrate it into a smart glasses solution. Their solution includes everything from the MEMS to the lens, and its specifications are impressive: a field of view of 35°, a resolution of 1024 x 600, and a brightness of 1600 nits. From our testing at the event, we were pleased to experience an image that was crisp and convincing. The company is also touting the next generation of its module, which offers an even greater field of view and will be available in the coming months.

1:30 PM

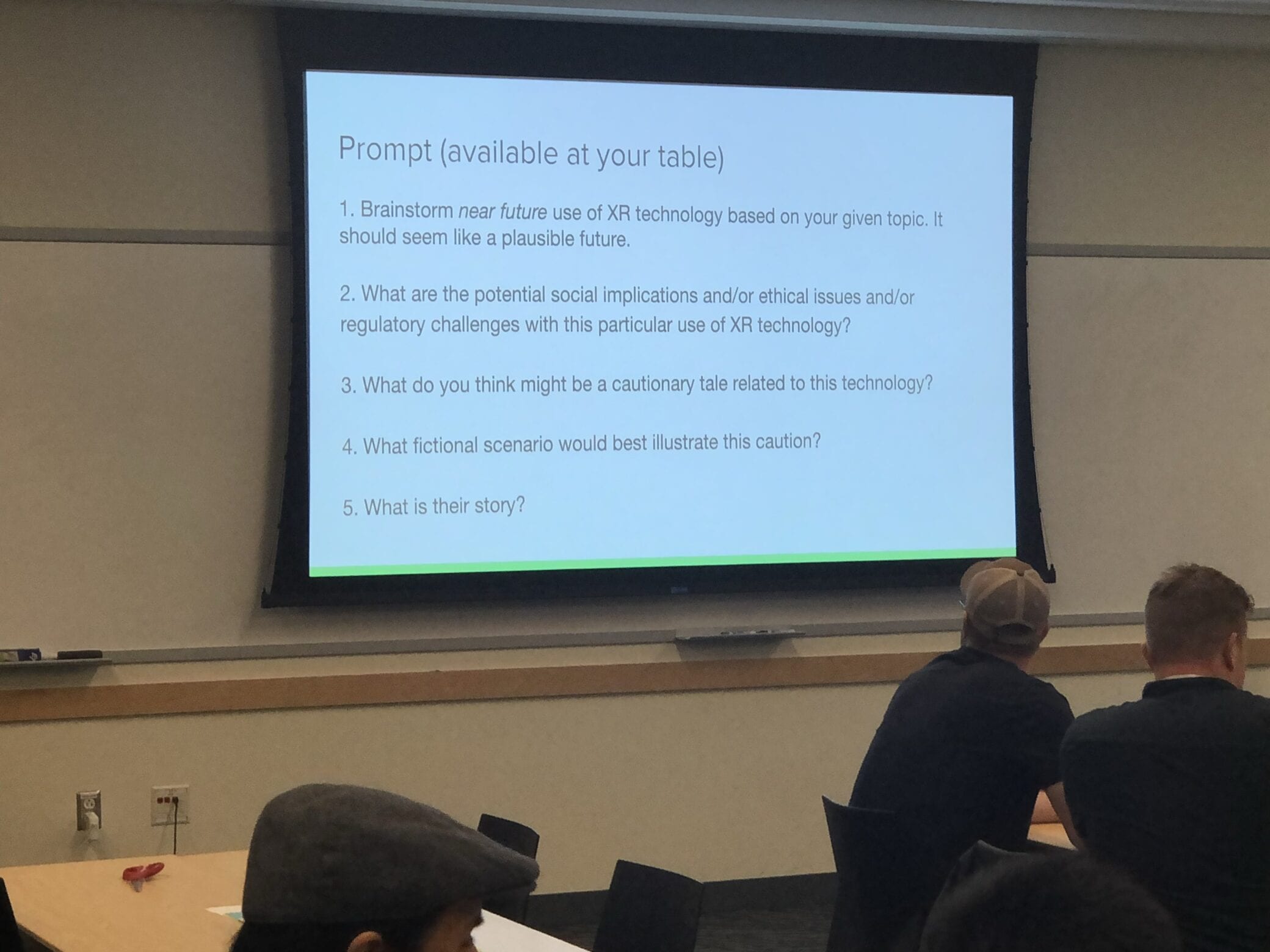

“Designing for Evil!”, one of the much-anticipated breakout sessions, is currently exploring the ethics of XR by asking participants to delve into the social implications of this particular technology. It is also asking people to share cautionary tales and envision scenarios that would best illustrate the risks and dilemmas that may arise from the use of VR, AR, and MR. Led by Elin Björling of the University of Washington, attendees are first discussing in small groups before sharing their findings and opinions with the room. It’s a genuinely interactive and meaningful approach to some of the most challenging questions that society must wrestle with as these innovations become more prevalent.

3:00 PM

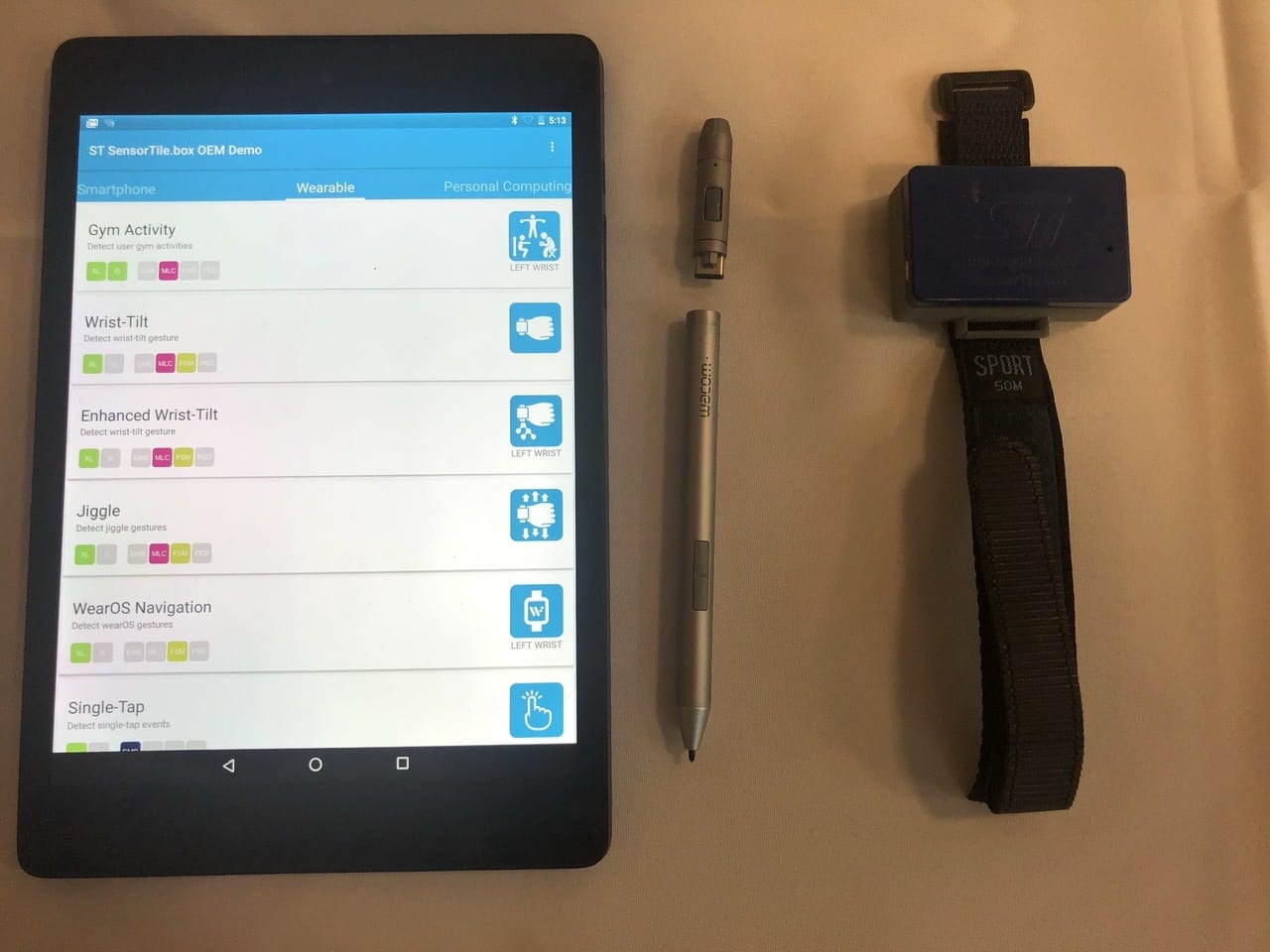

The first batch of breakout sessions is now over, and attendees are looking at all the demos on the show floor, such as these two presentations from ST. In the middle of the picture, we can see a pen with a little cap on top. In this tiny cap, one of our partners managed to put inertial sensors and an STM32L4 that runs a gesture-classification machine learning application built with STM32Cube.AI. This tiny accessory enables users to draw or launch various commands. For instance, in our demo, moving the wrist in a circular motion changed the color of a drawing.

Similarly, the box on the right side of the picture is the SensorTile.box, which also runs machine learning, but it does so directly on the inertial sensor, the LSM6DSOX, thanks to the sensor’s machine learning core. The system can classify certain activities or detect various movements without involving the microcontroller, thus enabling new levels of power savings and showcasing that there’s a lot more the industry can do with the sensors we use in VR, AR, and MR. Attendees looking to replicate these demos can start with our development platforms and our Function packs.
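To give a feel for the kind of lightweight classification a sensor can handle on its own, here is a minimal sketch of the general idea, assuming a small decision tree applied to simple statistics computed over a window of accelerometer samples. It is not the sensor’s actual configuration, which is prepared with ST’s tools rather than written in Python. The feature set, the thresholds, and the activity names are hypothetical.

```python
import math
from statistics import mean, pvariance


def magnitude(sample):
    """Norm of one (x, y, z) accelerometer sample, in g."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def window_features(samples):
    """Summarize a window of samples with a few cheap statistics."""
    magnitudes = [magnitude(sample) for sample in samples]
    return {
        "mean": mean(magnitudes),
        "variance": pvariance(magnitudes),
        "peak_to_peak": max(magnitudes) - min(magnitudes),
    }


def classify(features):
    """Hand-written decision tree; the thresholds are illustrative only."""
    if features["variance"] < 0.005:
        return "stationary"
    if features["peak_to_peak"] < 1.0:
        return "walking"
    return "running"


# Hypothetical window: a device resting flat, reading about 1 g on the z axis.
resting = [(0.0, 0.0, 1.0)] * 26
print(classify(window_features(resting)))
```

A tree like this only needs a handful of comparisons per window, which is why this style of classification can run inside a sensor while the microcontroller stays asleep.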

3:30 PM

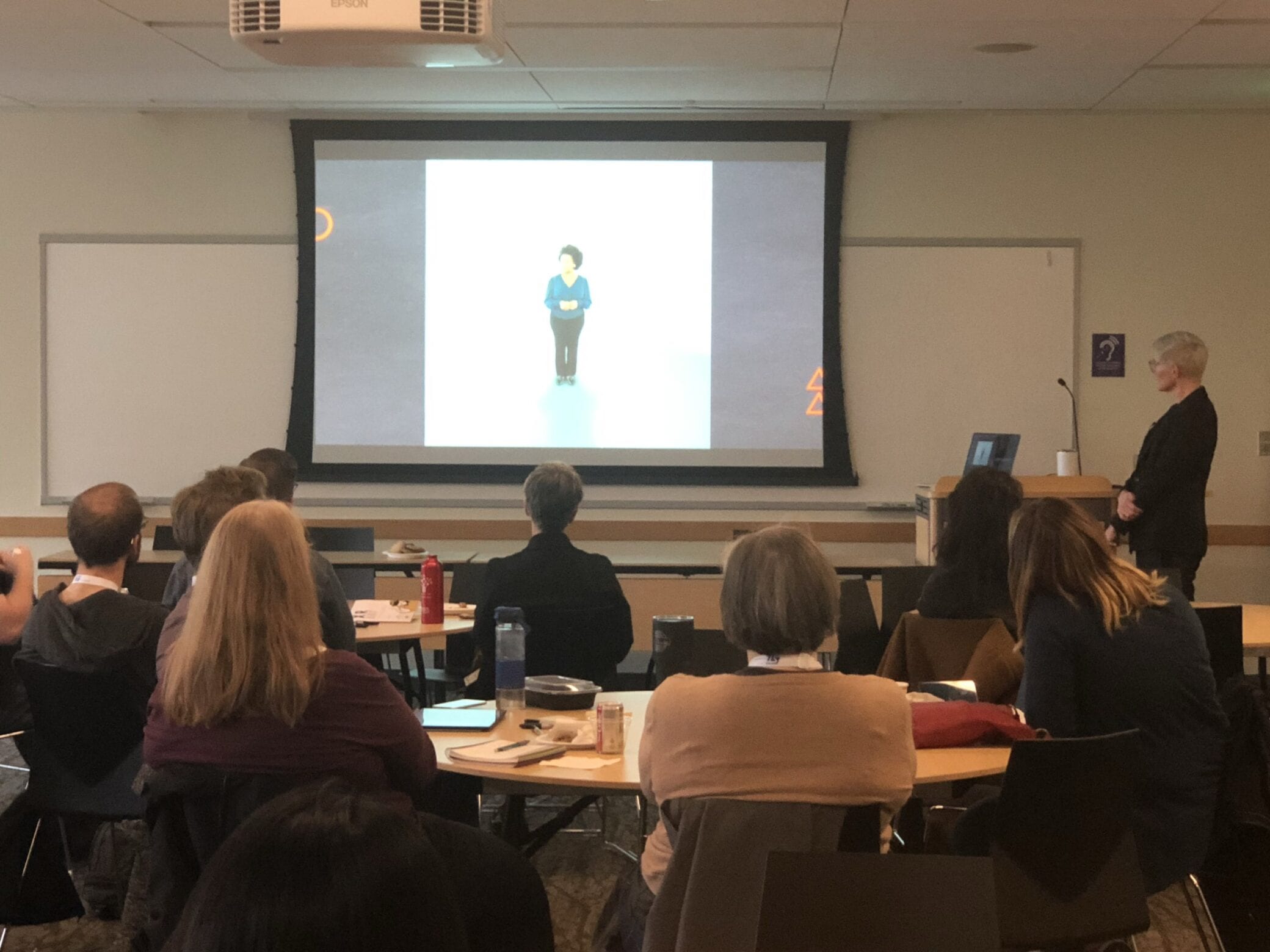

The second batch of breakout sessions at XR Day just started. One of them is looking at how XR can put users “in someone else’s shoes.” Amy Lou Abernethy is currently sharing her professional experience and showing an application that uses 180° videos to immerse the person wearing the head-mounted display in a different perspective. In the part of her presentation dealing with perspective bias, she shows how the application puts users in the position of a 32-year-old black woman who is suffering discrimination in the workplace. The technology will never replicate the emotional toll and grave distress that a person must feel in this situation. The video Amy showed was nevertheless highly compelling because it showed that XR could be a great tool in sensitivity training, among other things.

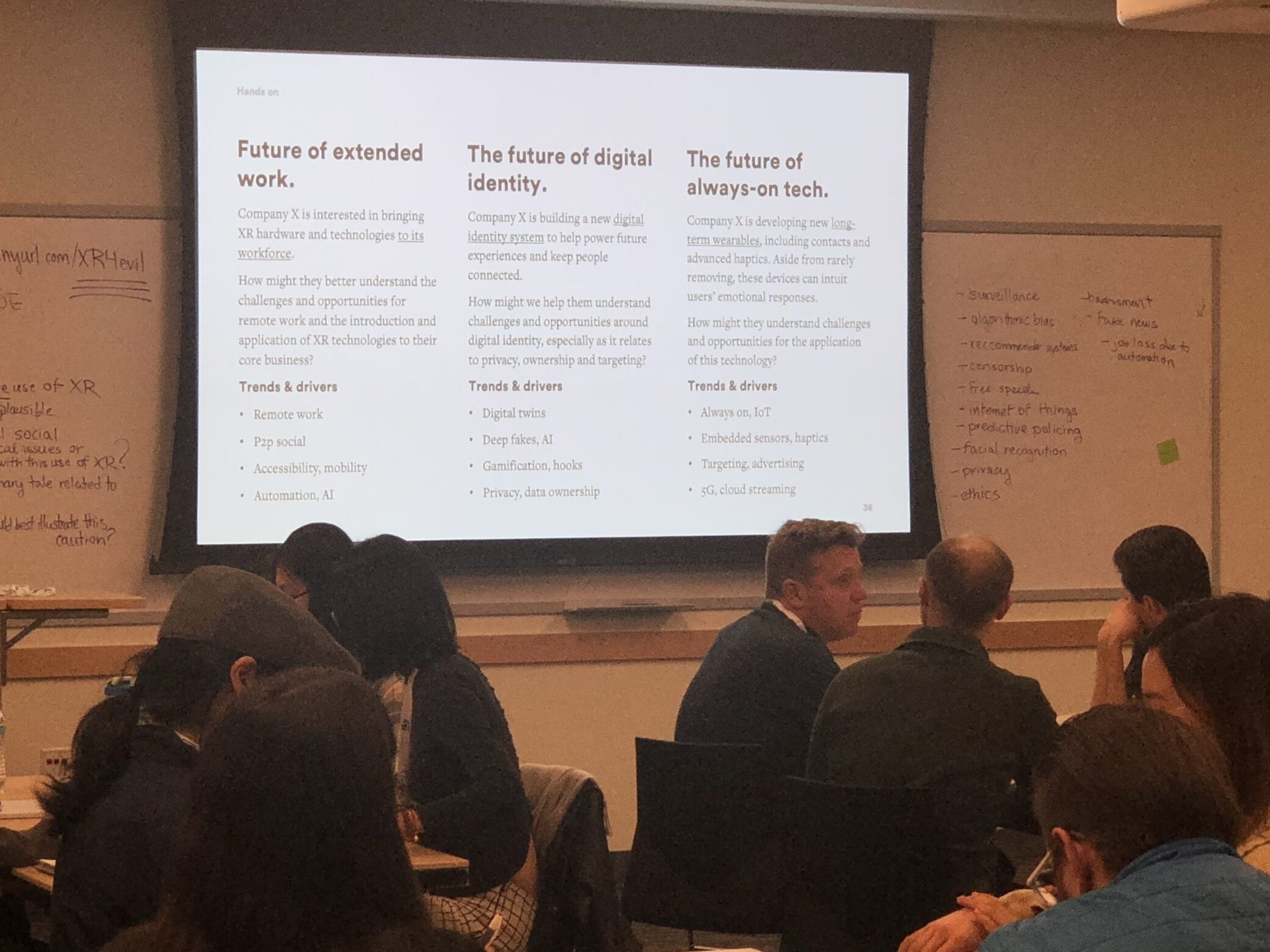

Another breakout session is looking at the next ten years of XR through various group discussions that are analyzing how XR can transform the workplace of tomorrow. The session also looks at how XR will affect our sense of identity and our interactions with technologies. The discussions that took place among the groups were meaningful and deep. Ryan Gerber, an educator and technology evangelist, guided the attendees into thought-provoking debates and exchanges.

4:35 PM

Chelsea Klukas, Product Design Manager at Oculus, is challenging our conception of the workplace by showing how XR can help us be present while working physically at home. She’s also addressing how VR can transform the way we train employees for complex tasks, some even potentially life-threatening if not done correctly. There are technical challenges, such as setting up a large fleet of headsets, but she’s also covering the current software and solutions that can help companies scale their installations.

5:00 PM

And that’s a wrap! Thank you so much for following XR Day with us. Until next time!